Jetson Generative AI – LLaMA Factory

LLaMA Factory provides a unified framework for fine-tuning large language models through an intuitive web interface. It brings complete model-training workflows to Jetson devices, so you can customize LLMs for your specific use cases and deploy them at the edge.

In this article, you’ll learn how to run LLaMA Factory on Jetson Orin for efficient LLM fine-tuning and deployment.

Features

- Support for multiple LLM architectures including LLaMA, Qwen, ChatGLM, and more

- Multiple training stages: Supervised Fine-Tuning, Reward Modeling, PPO, DPO, KTO, and Pre-Training

- Three fine-tuning methods: full, freeze, and lora

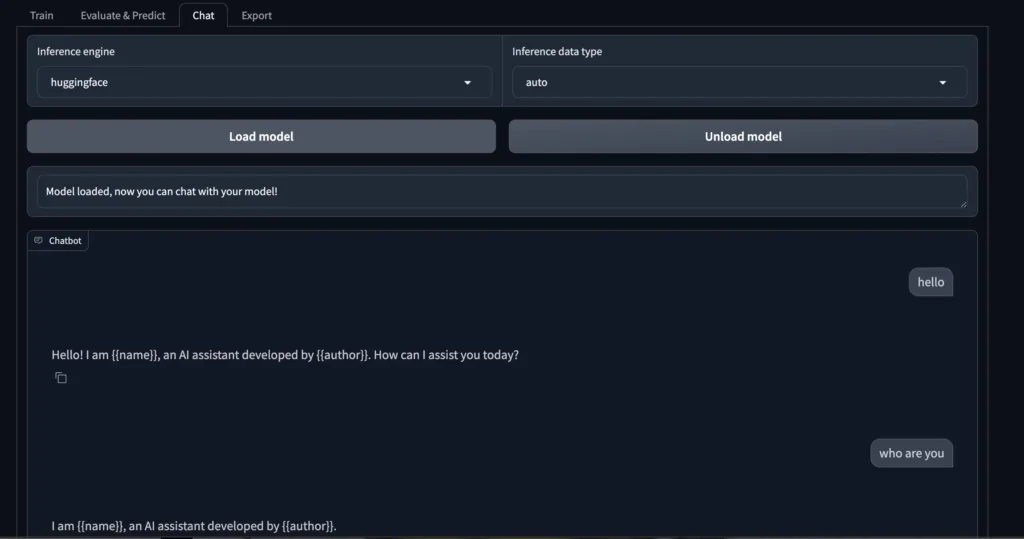

- Gradio-based web UI with Train, Evaluate & Predict, Chat, and Export tabs

- Built-in dataset support with preview functionality

- Integrated chat interface for testing models

- Real-time training loss visualization

- Advanced configurations for quantization, LoRA, RLHF, and more

- Model evaluation with customizable generation parameters

Requirements

| Hardware / Software | Notes |
|---|---|
| Jetson AGX Orin with ≥ 32 GB RAM | 64 GB recommended for larger models |
| JetPack 6.0+ | For CUDA 12.x support |
| NVMe SSD | Essential for model storage and caching |
| Hugging Face token | Required for accessing gated models |
| ~50 GB free storage | For models and training checkpoints |
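Before continuing, you can sanity-check the RAM and storage rows of the requirements table from Python. This is a minimal sketch using only the standard library. It assumes models and checkpoints will live under /mnt/nvme (the NVMe mount used in the Troubleshooting table) and reports sizes in GiB. The MemTotal value in /proc/meminfo usually reads somewhat below the module's nominal capacity because part of RAM is reserved, so treat the numbers as approximate.

```python
import os
import shutil

GIB = 1024 ** 3


def mem_total_gib(meminfo_text: str) -> float:
    """Parse the MemTotal line of /proc/meminfo (a value in kB) into GiB."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            return int(line.split()[1]) * 1024 / GIB
    raise ValueError("MemTotal not found in meminfo text")


def free_storage_gib(path: str) -> float:
    """Free space on the filesystem that contains `path`, in GiB."""
    return shutil.disk_usage(path).free / GIB


def report(storage_path: str = "/mnt/nvme") -> dict:
    """Collect RAM and free-storage figures, skipping sources that are missing."""
    info = {}
    if os.path.exists("/proc/meminfo"):
        with open("/proc/meminfo") as f:
            info["ram_gib"] = round(mem_total_gib(f.read()), 1)
    if os.path.exists(storage_path):
        info["free_storage_gib"] = round(free_storage_gib(storage_path), 1)
    return info


if __name__ == "__main__":
    # Compare against the table: >= 32 GB RAM and ~50 GB free storage.
    print(report())
```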

Step-by-Step Setup

1. Create necessary directories
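The exact directories depend on how you mount storage into the container. As a minimal sketch, assuming an NVMe drive mounted at /mnt/nvme, the following creates the Hugging Face cache directory named in the Troubleshooting table, plus two hypothetical directories (llama-factory/data and llama-factory/saves) for your own datasets and checkpoints. Rename them to match your setup.

```python
from pathlib import Path

# /mnt/nvme/cache/huggingface comes from the Troubleshooting table; the two
# llama-factory/* entries are hypothetical names chosen for illustration.
NVME_ROOT = Path("/mnt/nvme")
SUBDIRS = [
    "cache/huggingface",    # Hugging Face model/dataset cache
    "llama-factory/data",   # hypothetical: custom datasets
    "llama-factory/saves",  # hypothetical: training checkpoints
]


def create_dirs(root: Path = NVME_ROOT) -> list:
    """Create each subdirectory under `root`; safe to run more than once."""
    created = []
    for sub in SUBDIRS:
        path = root / sub
        path.mkdir(parents=True, exist_ok=True)  # no error if it already exists
        created.append(path)
    return created


if __name__ == "__main__":
    for p in create_dirs():
        print(p)
```

If /mnt/nvme is owned by root, run the script with sufficient permissions or create the directories with sudo.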

2. Set your Hugging Face token

Replace your_hf_token_here with your own access token from https://huggingface.co/settings/tokens.
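Rather than hard-coding the token, you can keep it in the HF_TOKEN environment variable, which is the name the huggingface_hub library reads. The check below is a minimal sketch that assumes your launch command forwards HF_TOKEN into the container. It fails early if the variable is unset or still holds the placeholder.

```python
import os

PLACEHOLDER = "your_hf_token_here"


def get_hf_token(env=os.environ) -> str:
    """Return HF_TOKEN from `env`, rejecting empty or placeholder values."""
    token = env.get("HF_TOKEN", "").strip()
    if not token or token == PLACEHOLDER:
        raise RuntimeError(
            "Set HF_TOKEN to your token from https://huggingface.co/settings/tokens"
        )
    return token


if __name__ == "__main__":
    get_hf_token()
    print("HF_TOKEN is set")  # never print the token itself
```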

3. Launch LLaMA Factory
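LLaMA Factory runs in a container. The sketch below only assembles and prints a docker run command. The --runtime nvidia flag, port 7860, and the /mnt/nvme/cache/huggingface cache come from this article. The image name is a hypothetical placeholder. The container-side cache path (/root/.cache/huggingface, the Hugging Face default for the root user) and the llamafactory-cli webui entry point are assumptions, so check them against the image you use.

```python
import shlex

# Hypothetical placeholder: substitute the LLaMA Factory image you actually use.
IMAGE = "<llama-factory-image>"


def build_run_command(image: str = IMAGE,
                      cache_dir: str = "/mnt/nvme/cache/huggingface",
                      port: int = 7860,
                      command: tuple = ("llamafactory-cli", "webui")) -> list:
    """Assemble a docker run command for the web UI (entry point assumed)."""
    return [
        "docker", "run", "-it", "--rm",
        "--runtime", "nvidia",                          # give the container GPU access
        "-p", f"{port}:7860",                           # host port -> Gradio's 7860
        "-e", "HF_TOKEN",                               # forward HF_TOKEN from the host shell
        "-v", f"{cache_dir}:/root/.cache/huggingface",  # persist downloads on NVMe (assumed path)
        image,
        *command,
    ]


if __name__ == "__main__":
    print(shlex.join(build_run_command()))
```

Printing the command instead of executing it lets you review it first. Once it looks right, you can pass the list to subprocess.run.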

4. Access the Web UI

Once the container starts, you’ll see:

Running on local URL: http://0.0.0.0:7860

Local access: Open http://localhost:7860 in your browser

Remote access: Use http://<jetson-ip>:7860
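If the page does not load, a quick TCP check shows whether anything is listening on port 7860. This is a minimal sketch using only the standard library. To test remote access from another machine, replace localhost with the Jetson's IP address.

```python
import socket


def ui_is_reachable(host: str = "localhost", port: int = 7860,
                    timeout: float = 2.0) -> bool:
    """True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, timed out, unreachable, name resolution failure
        return False


if __name__ == "__main__":
    print("Web UI reachable:", ui_is_reachable())
```

If the check succeeds on the Jetson itself but fails from another machine, a firewall is the likely cause (see Troubleshooting).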

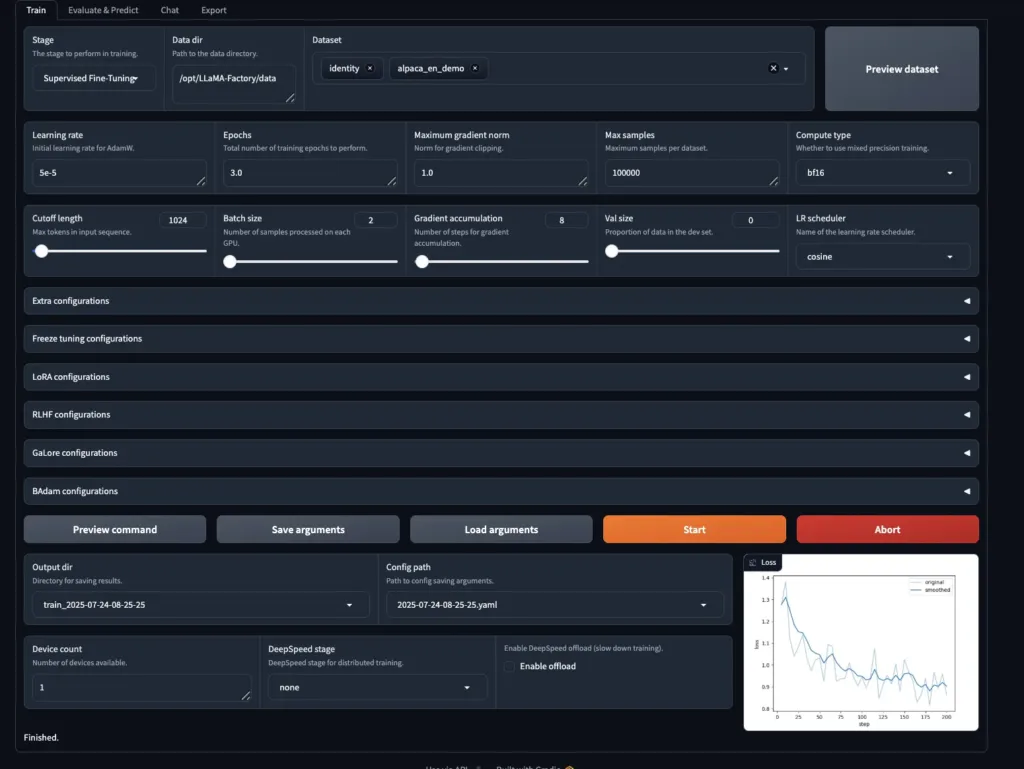

5. Configure your fine-tuning

In the Train tab:

1. Select Training Stage:

- Supervised Fine-Tuning (most common)

- Reward Modeling

- PPO (Proximal Policy Optimization)

- DPO (Direct Preference Optimization)

- KTO (Kahneman-Tversky Optimization)

- Pre-Training

2. Choose Finetuning Method:

- lora – Low-rank adaptation, best for memory efficiency

- freeze – Freezes most base model layers and trains only the rest

- full – Full parameter fine-tuning

3. Configure Data:

- Data directory: /opt/LLaMA-Factory/data

- Select dataset from dropdown

- Use “Preview dataset” to verify data format

4. Set Training Parameters:

- Cutoff length: 1024 (max tokens in input sequence)

- Max samples: 100000

- Batch size: 2

- Learning rate: 5e-5 (in Advanced configurations)

- Epochs: 3.0 (in Advanced configurations)

5. Advanced Configurations (expandable sections):

- Quantization bit (none/bitsandbytes)

- Extra configurations

- Freeze tuning configurations

- LoRA configurations

- RLHF configurations

- GaLore configurations

- BAdam configurations

6. Start Training:

- Click “Preview command” to verify settings

- Click “Start” to begin training

- Monitor real-time loss graph

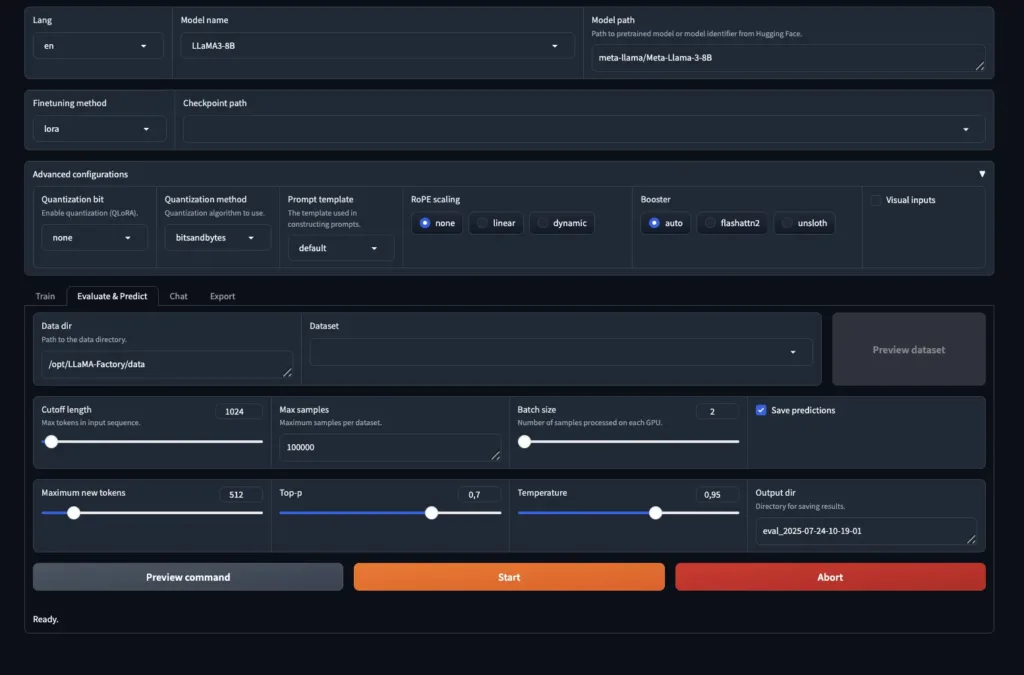

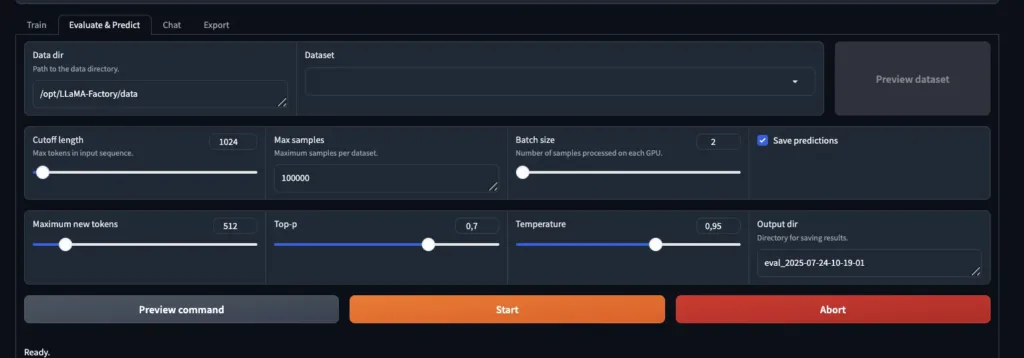
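"Preview command" shows the command line the web UI will run. As a rough sketch of how the settings above map onto that command, the following builds an equivalent llamafactory-cli train invocation. The argument names reflect LLaMA Factory's training arguments as we understand them. The model, template, dataset, and output values are hypothetical placeholders. Compare the result against the preview from your own installation before relying on it.

```python
import shlex

# Values from "Set Training Parameters"; <...> entries are hypothetical placeholders.
train_args = {
    "stage": "sft",                      # Supervised Fine-Tuning
    "do_train": True,
    "model_name_or_path": "<model-id>",  # your base model
    "template": "<template>",            # chat template matching the model
    "finetuning_type": "lora",
    "dataset_dir": "/opt/LLaMA-Factory/data",
    "dataset": "<dataset-name>",         # dataset chosen in the dropdown
    "cutoff_len": 1024,
    "max_samples": 100000,
    "per_device_train_batch_size": 2,
    "learning_rate": 5e-5,
    "num_train_epochs": 3.0,
    "output_dir": "<output-dir>",        # where checkpoints are written
}


def to_cli(args: dict) -> str:
    """Render the settings as a shell-quoted `--key value` command line."""
    parts = ["llamafactory-cli", "train"]
    for key, value in args.items():
        parts += [f"--{key}", str(value)]
    return shlex.join(parts)


if __name__ == "__main__":
    print(to_cli(train_args))
```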

6. Evaluate your model

Switch to the Evaluate & Predict tab to assess model performance:

1. Configure evaluation settings:

- Data directory and dataset (same as training)

- Cutoff length: 1024

- Max samples: 100000

- Batch size: 2

2. Set generation parameters:

- Maximum new tokens: 512

- Top-p: 0.7

- Temperature: 0.95

3. Run evaluation:

- Enable “Save predictions” to store results

- Click “Start” to begin evaluation

- Results saved to timestamped output directory
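The sketch below shows what the Top-p and Temperature settings do, using the general technique. It divides the logits by the temperature and applies softmax. It then keeps the smallest set of most-likely tokens whose probabilities sum to at least top-p (nucleus sampling) and renormalizes them. It runs on a toy logit list and is not the code LLaMA Factory or Transformers actually runs.

```python
import math


def top_p_distribution(logits, temperature=0.95, top_p=0.7):
    """Temperature-scale logits, softmax them, then keep the nucleus of
    most-likely tokens whose cumulative probability reaches top_p."""
    scaled = [x / temperature for x in logits]
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]  # subtract max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]

    # Walk tokens from most to least likely until the mass reaches top_p.
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break

    # Renormalize so the kept probabilities sum to 1; sampling draws from these.
    kept_total = sum(probs[i] for i in kept)
    return {i: probs[i] / kept_total for i in kept}


if __name__ == "__main__":
    print(top_p_distribution([2.0, 1.0, 0.0, -1.0]))
```

A lower temperature sharpens the distribution, and a lower top-p trims more of the tail. Both make outputs more deterministic.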

7. Test your model interactively

Navigate to the Chat tab:

1. Load your model:

- Select inference engine: huggingface

- Choose inference data type: auto

- Click the “Load model” button

2. Chat with your model:

- Type messages in the chat interface

- Model responds in real-time

- Test both base and fine-tuned versions

3. Unload the current model before switching to another one

8. Export your model

Use the Export tab to save your fine-tuned model in various formats for deployment. This allows you to use your model outside of LLaMA Factory in production environments.
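For a model trained with LoRA, exporting typically merges the adapter back into the base weights so the result loads like an ordinary checkpoint. Under the standard LoRA formulation, each adapted weight matrix becomes W' = W + (alpha / r) · B·A. Here B is d_out × r, A is r × d_in, and alpha is the LoRA scaling hyperparameter. The sketch below applies that update to small nested lists to show the arithmetic. A real export would apply it to every adapted layer's tensors.

```python
def matmul(a, b):
    """Plain-Python matrix product of a (n x k) and b (k x m)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def merge_lora(w, a, b, alpha, r):
    """Return W + (alpha / r) * (B @ A).

    w: d_out x d_in base weight, b: d_out x r, a: r x d_in.
    """
    scale = alpha / r
    delta = matmul(b, a)
    return [[w[i][j] + scale * delta[i][j] for j in range(len(w[0]))]
            for i in range(len(w))]


if __name__ == "__main__":
    w = [[1.0, 0.0], [0.0, 1.0]]
    b = [[1.0], [2.0]]   # d_out x r with r = 1
    a = [[3.0, 4.0]]     # r x d_in
    print(merge_lora(w, a, b, alpha=2, r=1))  # [[7.0, 8.0], [12.0, 17.0]]
```

Because the update is folded into W, inference with the merged model needs no extra adapter computation.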

Training Method Guide

| Method | Memory Usage | Training Speed | Use Case |
|---|---|---|---|
| LoRA | Low | Fast | Recommended for most Jetson deployments |
| Freeze | Medium | Medium | When you need to preserve base model behavior |
| Full | High | Slow | Small models only (≤1.5B parameters) |
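The Memory Usage column follows from how many parameters receive gradients and optimizer state. For one d_out × d_in weight matrix, full fine-tuning trains all d_out·d_in entries. LoRA with rank r trains only the two factors: B (d_out × r) and A (r × d_in). The sketch compares the two for an illustrative 4096 × 4096 projection with r = 8. These sizes are hypothetical and not tied to a specific model. The frozen base weights still have to fit in memory either way.

```python
def full_trainable(d_out: int, d_in: int) -> int:
    """Entries updated when the whole matrix is fine-tuned."""
    return d_out * d_in


def lora_trainable(d_out: int, d_in: int, r: int) -> int:
    """Entries in B (d_out x r) plus A (r x d_in)."""
    return d_out * r + r * d_in


if __name__ == "__main__":
    d = 4096
    full = full_trainable(d, d)
    lora = lora_trainable(d, d, r=8)
    # prints: full: 16,777,216  lora: 65,536  ratio: 0.39%
    print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
```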

Troubleshooting

| Issue | Fix |
|---|---|
| Out of memory during training | Reduce batch size to 1 or 2, use the LoRA method, or use a smaller model |
| Slow model download | The first download can take a while; models are then cached in /mnt/nvme/cache/huggingface and reused on later runs |
| Connection refused | Ensure port 7860 is not blocked by a firewall |
| Training won’t start | Check that the dataset format matches the selected template |
| GPU not utilized | Check GPU load with tegrastats and make sure the container was started with --runtime nvidia |
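To confirm the GPU is doing the work during training, watch the GR3D_FREQ field of tegrastats, which reports GPU load. The sketch below reads one line from tegrastats and extracts that percentage. It assumes the field appears as GR3D_FREQ followed by a percentage, so you may need to adjust the pattern for your JetPack release. Run it on the Jetson host while training is in progress.

```python
import re
import subprocess
from typing import Optional

# Assumed field format: "GR3D_FREQ <load>%", possibly followed by "@..." details.
GR3D = re.compile(r"GR3D_FREQ (\d+)%")


def gpu_load_percent(line: str) -> Optional[int]:
    """Extract the GPU load from one line of tegrastats output, or None."""
    match = GR3D.search(line)
    return int(match.group(1)) if match else None


def sample_gpu_load(interval_ms: int = 1000) -> Optional[int]:
    """Read a single tegrastats line (tool assumed on PATH) and parse it."""
    proc = subprocess.Popen(
        ["tegrastats", "--interval", str(interval_ms)],
        stdout=subprocess.PIPE,
        text=True,
    )
    try:
        line = proc.stdout.readline()
    finally:
        proc.terminate()  # tegrastats runs until stopped
        proc.wait()
    return gpu_load_percent(line)


if __name__ == "__main__":
    print("GPU load (%):", sample_gpu_load())
```

A value stuck near 0% while the loss graph is updating suggests training is not using the GPU. In that case, check that the container was started with --runtime nvidia.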

For more information about LLaMA Factory features and supported models, visit the LLaMA Factory repository.