How to Run MLC LLM on Jetson AGX Thor?

What is MLC LLM?

MLC LLM (Machine Learning Compilation for Large Language Models) is an open-source project designed to make large language models (LLMs) run efficiently across different hardware platforms. Its main goal is to optimize performance and reduce energy consumption, enabling AI applications to run not only in the cloud but also on edge devices.

NVIDIA’s next-generation Jetson AGX Thor platform delivers powerful computing capabilities for robotics, autonomous systems, and AI-driven applications. By leveraging MLC LLM on Jetson AGX Thor, large language models can be optimized to run in real time, supporting tasks such as natural language processing, decision-making, and human-like interaction with higher efficiency.

In short, MLC LLM on Jetson AGX Thor acts as a bridge that brings high-performance large language model capabilities to edge devices.

Requirements

- JetPack 7 (Learn more about JetPack)

- CUDA 13

- At least 25 GB of free disk space (for the MLC LLM image alone; models need additional space)

- A stable and fast internet connection
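Before pulling the image, it can help to confirm the disk-space requirement. The following is a minimal sketch, assuming Docker stores its images on the root filesystem (adjust the path if your Docker data directory lives elsewhere); the helper names are illustrative.

```python
import shutil

REQUIRED_GB = 25  # from the requirements above: image only, models need more


def free_gb(path: str = "/") -> float:
    """Return the free space on the filesystem holding `path`, in GB (10^9 bytes)."""
    return shutil.disk_usage(path).free / 1e9


def has_room(free: float, required: float = REQUIRED_GB) -> bool:
    """True when the free space covers the image's footprint."""
    return free >= required


# Assumption: Docker images are stored on the root filesystem.
free = free_gb("/")
print(f"{free:.1f} GB free; enough for the image: {has_room(free)}")
```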

How to use MLC LLM?

First, pull the MLC LLM Docker image onto your Jetson AGX Thor and start a container from it.

If you’d like to explore the available images or replace them with newer ones, you can visit the GitHub Container Registry.
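As a rough sketch of this step, the snippet below assembles a `docker pull` and a `docker run` command and prints them. The image reference is a placeholder, not a real image name: substitute the one you pick from the registry. Mounting the host's Hugging Face cache at `/root/.cache/huggingface` is also an assumption (it presumes the container runs as root), made so that downloaded models survive container restarts.

```python
import shlex
from pathlib import Path

# Assumption: placeholder only. Substitute the image reference listed on the
# GitHub Container Registry page for the MLC LLM container you want to use.
IMAGE = "ghcr.io/OWNER/IMAGE:TAG"


def docker_run_argv(image: str, hf_cache: Path) -> list[str]:
    """Build a `docker run` command that starts an interactive GPU container."""
    return [
        "docker", "run", "--rm", "-it",
        "--runtime", "nvidia",   # use the NVIDIA container runtime for GPU access
        "--network", "host",     # share the host network with the container
        # Assumption: the container user is root, so its cache is under /root.
        "-v", f"{hf_cache}:/root/.cache/huggingface",
        image,
    ]


print(shlex.join(["docker", "pull", IMAGE]))
print(shlex.join(docker_run_argv(IMAGE, Path.home() / ".cache" / "huggingface")))
```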

Once inside the container, find the model you want to download from Hugging Face.

Use the hf download command inside the container to download the model.

For example:
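A minimal sketch of the download step, written as a small Python helper that prints the command to run. The repository ID is an illustrative assumption; substitute the model you picked. The second helper shows the folder name the Hugging Face cache uses for a repository, which is where the snapshots directory mentioned later lives.

```python
import shlex

# Assumption: illustrative repository; substitute the model you picked.
REPO_ID = "Qwen/Qwen2.5-7B-Instruct"


def hf_download_argv(repo_id: str) -> list[str]:
    """Build the `hf download` command for a full model repository."""
    return ["hf", "download", repo_id]


def cache_dir_name(repo_id: str) -> str:
    """Folder name the Hugging Face cache uses for a model repository."""
    return "models--" + repo_id.replace("/", "--")


print(shlex.join(hf_download_argv(REPO_ID)))
print(cache_dir_name(REPO_ID))
```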

In the next step, pass the folder where you downloaded the model to the mlc_llm convert_weight command.

This command converts the model’s original Hugging Face weights (in safetensors format) into the optimized MLC LLM format. During conversion, the weights are quantized (e.g., to q4bf16_1), which reduces memory usage and improves runtime efficiency on the GPU without heavily sacrificing accuracy.

In short, mlc_llm convert_weight takes the raw model checkpoint and transforms it into a format that can be directly executed by the MLC runtime on your target device (e.g., Jetson AGX Thor with CUDA).
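The conversion call can be sketched as follows. The helper only assembles and prints the command. Both paths are hypothetical placeholders, and `SNAPSHOT_HASH` stands for the real folder name inside the snapshots directory.

```python
import shlex


def convert_weight_argv(model_dir: str, out_dir: str,
                        quantization: str = "q4bf16_1",
                        device: str = "cuda") -> list[str]:
    """Build an `mlc_llm convert_weight` command for a downloaded checkpoint."""
    return [
        "mlc_llm", "convert_weight", model_dir,
        "--quantization", quantization,  # quantization mode named in the text
        "--device", device,              # run the conversion on the GPU
        "-o", out_dir,                   # folder for the converted weights
    ]


# Assumption: hypothetical paths; replace SNAPSHOT_HASH with the real folder name.
MODEL_DIR = ("/root/.cache/huggingface/hub/"
             "models--Qwen--Qwen2.5-7B-Instruct/snapshots/SNAPSHOT_HASH")
OUT_DIR = "/root/models/qwen2.5-7b-q4bf16_1-MLC"

print(shlex.join(convert_weight_argv(MODEL_DIR, OUT_DIR)))
```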

⚠️ Warning: In the command, replace the placeholder after snapshots/ with the actual folder name you see inside the snapshots directory (e.g., aeb13307a71acd8fe81861d94ad54ab689df…). This folder contains the real model files such as config.json, tokenizer.json, and model.safetensors, which are required for the mlc_llm convert_weight command to work.
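To avoid copying the snapshot folder name by hand, a short helper can locate it and check that the files named above are present. This is a sketch with illustrative function names. It assumes the model cache holds exactly one snapshot, and it accepts any `*.safetensors` file because larger models are often split into several shards rather than a single model.safetensors.

```python
from pathlib import Path

# Files the warning above names as needed by `mlc_llm convert_weight`.
REQUIRED = ("config.json", "tokenizer.json")


def find_snapshot(model_cache: Path) -> Path:
    """Return the single snapshot folder under <model_cache>/snapshots."""
    snapshots = [p for p in (model_cache / "snapshots").iterdir() if p.is_dir()]
    if len(snapshots) != 1:
        raise RuntimeError(f"expected one snapshot, found {len(snapshots)}")
    return snapshots[0]


def missing_files(snapshot: Path) -> list[str]:
    """List required files absent from the snapshot (sharded weights count)."""
    missing = [name for name in REQUIRED if not (snapshot / name).exists()]
    if not any(snapshot.glob("*.safetensors")):
        missing.append("*.safetensors")
    return missing
```

Calling `find_snapshot` on the model's cache folder (the `models--...` directory) returns the path to pass to mlc_llm convert_weight, and an empty list from `missing_files` suggests the snapshot is complete.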

In the next step, mlc_llm gen_config generates the configuration files needed to run the converted model in MLC. It defines the conversation template (e.g., Qwen format), context length, batch size, and other runtime parameters. In short, it makes the weight-converted model fully executable in the MLC runtime.
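A sketch of the configuration step, again as a helper that prints the command. The template name `qwen2` is an assumption for a Qwen-family model; pick the template that matches your model. The paths are hypothetical, and the output folder should be the same one used for the converted weights.

```python
import shlex
from typing import Optional


def gen_config_argv(model_dir: str, out_dir: str,
                    quantization: str = "q4bf16_1",
                    conv_template: str = "qwen2",  # assumption: Qwen-family model
                    context_window: Optional[int] = None) -> list[str]:
    """Build an `mlc_llm gen_config` command for a converted model."""
    argv = [
        "mlc_llm", "gen_config", model_dir,
        "--quantization", quantization,    # must match the convert_weight step
        "--conv-template", conv_template,
    ]
    if context_window is not None:
        # Optional cap on the context length.
        argv += ["--context-window-size", str(context_window)]
    return argv + ["-o", out_dir]


# Assumption: hypothetical paths, matching the weight-conversion step.
print(shlex.join(gen_config_argv(
    "/root/.cache/huggingface/hub/models--Qwen--Qwen2.5-7B-Instruct/snapshots/SNAPSHOT_HASH",
    "/root/models/qwen2.5-7b-q4bf16_1-MLC",
)))
```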

⚠️ Note: The “Not found” messages for files like tokenizer.model or added_tokens.json are not errors. These files are optional and not required by all models. As long as tokenizer.json, vocab.json, and merges.txt are found and copied, the model configuration is complete and ready to run.

Now that the configuration is ready, we can move on to the compilation step. In this stage, the model is compiled into a CUDA-optimized shared library (.so file), which enables fast execution on the GPU.
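The compilation call can be sketched like this. It points `mlc_llm compile` at the mlc-chat-config.json written by the previous step and names a `.so` output file. The folder and library names are hypothetical.

```python
import posixpath
import shlex


def compile_argv(mlc_dir: str, lib_path: str, device: str = "cuda") -> list[str]:
    """Build an `mlc_llm compile` command that emits a shared library."""
    if not lib_path.endswith(".so"):
        raise ValueError("expected a .so output path for a CUDA shared library")
    return [
        "mlc_llm", "compile",
        posixpath.join(mlc_dir, "mlc-chat-config.json"),  # written by gen_config
        "--device", device,
        "-o", lib_path,
    ]


# Assumption: hypothetical folder and library names.
MLC_DIR = "/root/models/qwen2.5-7b-q4bf16_1-MLC"
print(shlex.join(compile_argv(MLC_DIR, posixpath.join(MLC_DIR, "model-cuda.so"))))
```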

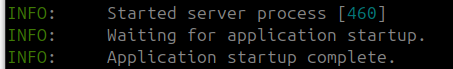

With the compilation complete, the final step is to serve the model so it can handle inference requests. The mlc_llm serve command launches an HTTP server that exposes the model as an API endpoint, making it accessible for testing or integration into applications.
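A sketch of the serve call, assuming the converted model folder and the compiled library from the earlier steps. The host and port shown are the values assumed throughout these examples; the paths are hypothetical.

```python
import shlex


def serve_argv(mlc_dir: str, lib_path: str,
               host: str = "127.0.0.1", port: int = 8000) -> list[str]:
    """Build an `mlc_llm serve` command for a compiled model."""
    return [
        "mlc_llm", "serve", mlc_dir,
        "--model-lib", lib_path,  # the .so produced by the compile step
        "--host", host,
        "--port", str(port),
    ]


# Assumption: hypothetical paths from the earlier steps.
MLC_DIR = "/root/models/qwen2.5-7b-q4bf16_1-MLC"
print(shlex.join(serve_argv(MLC_DIR, MLC_DIR + "/model-cuda.so")))
```

Binding to 127.0.0.1 keeps the server reachable only from the Jetson itself; pass `host="0.0.0.0"` if other machines on the network need access.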

If you see this output, the model has been compiled successfully and is now being served.

You can test it with a curl request to the server.
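The same kind of request can be sketched in Python with only the standard library. This assumes the server listens on 127.0.0.1:8000 and exposes an OpenAI-style `/v1/chat/completions` endpoint; the `model` value is assumed to be the same model path that was passed to mlc_llm serve.

```python
import json
import urllib.request

# Assumption: server started on the local host with port 8000.
URL = "http://127.0.0.1:8000/v1/chat/completions"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a chat-completion POST request for a single user message."""
    payload = {
        "model": model,  # assumption: the model path given to `mlc_llm serve`
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `ask("/root/models/qwen2.5-7b-q4bf16_1-MLC", "Hello!")` would return the model's reply (the path is a hypothetical placeholder).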

Which Jetson should I choose for my LLM model?

The table below lists the RAM requirements of popular LLMs, along with the Jetson modules that meet the minimum memory needed to run them. Choose the one that best fits your needs.

| Model | Parameters | Quantization | Required RAM (GB) | Recommended Minimum Jetson |
|---|---|---|---|---|
| deepseek-ai Deepseek-R1 Base | 684B | Dynamic-1.58-bit | 162.11 | Not supported (≥128 GB and above) |
| deepseek-ai Deepseek-R1 Distill-Qwen-1.5B | 1.5B | Q4_K_M | 0.90 | Jetson Orin Nano 4 GB, Jetson Nano 4 GB |
| deepseek-ai Deepseek-R1 Distill-Qwen-7B | 7B | Q5_K_M | 5.25 | Jetson Orin Nano 8 GB, Jetson Orin NX 8 GB, Jetson Xavier NX 8 GB |
| mistralai Mixtral 8x22B-Instruct-v0.1 | 22B | Q4_K_M | 13.20 | Jetson Orin NX 16 GB, Jetson AGX Orin 32 GB, Jetson AGX Xavier 32 GB |
| mistralai Mathstral 7B-v0.1 | 7B | Q5_K_M | 5.25 | Jetson Orin Nano 8 GB, Jetson Orin NX 8 GB, Jetson Xavier NX 8 GB |
| google gemma-3 12b-it | 12B | Q4_K_M | 7.20 | Jetson Orin NX 8 GB, Jetson Orin Nano 8 GB, Jetson Xavier NX 8 GB |
| meta-llama Llama-3.1 70B-Instruct | 70B | Q5_K_M | 52.50 | Jetson AGX Orin 64 GB, Jetson AGX Xavier 64 GB, Jetson AGX Thor (T5000) 128 GB |
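The RAM figures in the table appear consistent with a simple rule of thumb: parameters (in billions) × bits per weight ÷ 8, plus roughly 20% overhead. That formula is inferred from the table values rather than stated in the text, so treat the sketch below as an estimate only. It also counts Q4_K_M as 4 bits and Q5_K_M as 5 bits, which is a simplification.

```python
OVERHEAD = 1.2  # assumption: ~20% overhead, inferred from the table values


def required_ram_gb(params_billion: float, bits_per_weight: float) -> float:
    """Estimate RAM in GB: weight bytes scaled by a fixed overhead factor."""
    weight_gb = params_billion * bits_per_weight / 8  # 1e9 params * bits / 8 bits per byte
    return weight_gb * OVERHEAD


def fits(params_billion: float, bits_per_weight: float, ram_gb: float) -> bool:
    """True when the estimate fits within a module's total memory."""
    return required_ram_gb(params_billion, bits_per_weight) <= ram_gb


print(round(required_ram_gb(7, 5), 2))   # 7B model at 5 bits per weight
print(fits(70, 5, 128))                  # 70B model at 5 bits on a 128 GB module
```

Real usage also depends on context length and runtime buffers, so leave headroom beyond the estimate when choosing a module.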