How to Run vLLM on Jetson AGX Thor

What Is vLLM and Why Does It Matter on Jetson AGX Thor?

vLLM is an open-source inference engine for serving large language models (LLMs) efficiently. Its PagedAttention mechanism stores the attention key-value cache in fixed-size blocks, much like paged virtual memory, which reduces wasted GPU memory. As a result, vLLM delivers both high throughput and low latency, making it practical to deploy advanced AI models in real-time applications.

On the hardware side, NVIDIA Jetson AGX Thor is a next-generation edge AI platform built for robotics, autonomous machines, and industrial systems. Its compute power and AI acceleration make it well suited to running LLMs at the edge.

When combined, vLLM on Jetson AGX Thor enables:

- Real-time LLM services (chatbots, assistants, summarization, translation)

- Vision + Language use cases (explaining camera input instantly)

- On-device inference with ultra-low latency and stronger data privacy

- Reduced reliance on cloud resources, with better energy efficiency

In short, vLLM provides the software intelligence and Thor provides the hardware muscle; together they make cutting-edge LLM experiences possible directly on the device.

Installation Process

First, download the following Triton Inference Server container image.

This image comes with vLLM version 0.9.2 pre-installed. The tag 25.08 refers to August 2025.

If you’d like to update to a newer version in the future, you can always visit the NVIDIA NGC Catalog to find the latest container releases.
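As a rough sketch of how the image name and tag fit together, the snippet below assembles `docker pull` and `docker run` commands for a given release tag. The repository name `nvcr.io/nvidia/tritonserver`, the `-vllm-python-py3` tag suffix, and the `docker run` options are assumptions based on the usual NGC naming scheme, so check them against the NGC Catalog entry before use.

```python
import shlex

# Assumptions: repository and tag suffix follow the usual NGC naming scheme
# for the Triton + vLLM image. Verify both in the NGC Catalog.
NGC_REPOSITORY = "nvcr.io/nvidia/tritonserver"
VLLM_TAG_SUFFIX = "vllm-python-py3"


def image_reference(release: str = "25.08") -> str:
    """Build the full image reference for a YY.MM release tag."""
    return f"{NGC_REPOSITORY}:{release}-{VLLM_TAG_SUFFIX}"


def docker_commands(release: str = "25.08") -> list[str]:
    """Return the pull and run commands as shell-ready strings."""
    image = image_reference(release)
    pull = ["docker", "pull", image]
    # --runtime nvidia exposes the GPU; --network host makes port 8000 reachable.
    run = ["docker", "run", "--rm", "-it", "--runtime", "nvidia",
           "--network", "host", image]
    return [shlex.join(pull), shlex.join(run)]


for command in docker_commands():
    print(command)
```

Switching to a newer release is then a matter of passing a different tag, for example `docker_commands("25.09")`, provided that release exists in the catalog.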

You can verify the installed vLLM version directly with Python.
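One way to do this check, shown here as a minimal sketch, is to read the package metadata with the standard library, which avoids importing vLLM itself. The helper name `installed_version` is hypothetical; inside the container described above it should report 0.9.2.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package: str = "vllm"):
    """Return the installed version string of a package, or None if absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


print("vLLM version:", installed_version())
```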

Next, you’ll need to create an account on Hugging Face, generate an access token, and log in with it.

This token will allow the container to securely download and run models directly from Hugging Face.
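The login step might look like the sketch below. It assumes the token has been exported as the `HF_TOKEN` environment variable and that the `huggingface_hub` package is available in the container; the helper names are hypothetical.

```python
import os


def read_hf_token(env=os.environ) -> str:
    """Read the access token from HF_TOKEN and fail early if it is missing."""
    token = env.get("HF_TOKEN", "").strip()
    if not token:
        raise RuntimeError("Set HF_TOKEN to a Hugging Face access token first.")
    return token


def login_to_hugging_face() -> None:
    """Log in so that gated models can be downloaded."""
    # Imported lazily so this file still loads where huggingface_hub is absent.
    from huggingface_hub import login

    login(token=read_hf_token())
```

Calling `login_to_hugging_face()` once inside the container stores the credential for later model downloads. Reading the token from the environment keeps it out of scripts and shell history.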

Once your environment is ready, you can launch the vLLM API server using the following command:

Here’s what each parameter does:

- `--model` → specifies which model to load (in this case, Llama-3.1-8B-Instruct from Hugging Face).

- `--tensor-parallel-size 1` → runs the model on a single GPU. If you have multiple GPUs, you can increase this value.

- `--gpu-memory-utilization 0.90` → tells vLLM to use up to 90% of available GPU memory. Adjust this if you run into memory errors.

- `--max-model-len 8192` → sets the maximum context length (in tokens) for the model.

- `--dtype float16` → runs the model in FP16 precision, which needs half the memory per weight of FP32 and is more efficient on Jetson AGX Thor.
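The parameters above can be assembled into a launch command as in the following sketch. It assumes the server is started through the `vllm.entrypoints.openai.api_server` module and that the model ID on Hugging Face is `meta-llama/Llama-3.1-8B-Instruct`; the helper `build_server_command` is hypothetical and only prints the command rather than running it.

```python
import shlex


def build_server_command(
    model: str = "meta-llama/Llama-3.1-8B-Instruct",  # assumed Hugging Face model ID
    tensor_parallel_size: int = 1,
    gpu_memory_utilization: float = 0.90,
    max_model_len: int = 8192,
    dtype: str = "float16",
) -> list[str]:
    """Return the argument list for launching the vLLM API server."""
    if not 0.0 < gpu_memory_utilization <= 1.0:
        raise ValueError("gpu_memory_utilization must be in the range (0, 1]")
    return [
        "python3", "-m", "vllm.entrypoints.openai.api_server",
        "--model", model,
        "--tensor-parallel-size", str(tensor_parallel_size),
        "--gpu-memory-utilization", f"{gpu_memory_utilization:.2f}",
        "--max-model-len", str(max_model_len),
        "--dtype", dtype,
    ]


# Print a copy-pasteable command line with the values discussed above.
print(shlex.join(build_server_command()))
```

If memory is tight, `build_server_command(gpu_memory_utilization=0.75)` produces the same command with a lower memory budget.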

⚠️ Heads-up: If the engine fails to start with a memory-related error, it usually means the engine couldn’t reserve enough GPU memory. Try lowering the GPU memory utilization, for example with `--gpu-memory-utilization 0.75`.

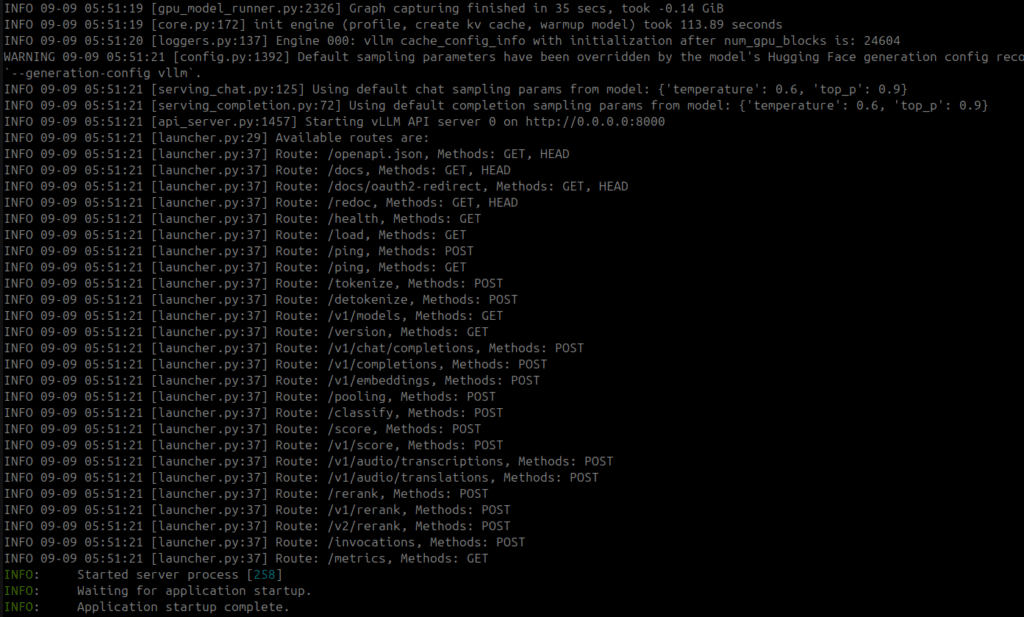

If you see a message like:

it means that vLLM is now serving on port 8000 and ready to accept requests.

At this point, you can start testing it with a simple curl command. For example:
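The same kind of test request can also be sent from Python using only the standard library, as sketched below. It assumes the server exposes the OpenAI-compatible `/v1/chat/completions` endpoint on `localhost:8000` and that the model name matches the one passed at launch; the helper names are hypothetical.

```python
import json
import urllib.request


def build_chat_request(
    prompt: str,
    model: str = "meta-llama/Llama-3.1-8B-Instruct",  # assumed model ID
    base_url: str = "http://localhost:8000",
    max_tokens: int = 64,
) -> urllib.request.Request:
    """Prepare (but do not send) a chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{base_url}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(send(build_chat_request("Hello from Jetson AGX Thor!")))` should print the model's reply.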

Which Jetson Should I Choose for My LLM?

The table below lists the RAM requirements of popular LLMs, along with the Jetson modules that meet the minimum specification to run each one. Choose the one that best fits your needs.

| Model | Parameters | Quantization | Required RAM (GB) | Recommended Minimum Jetson |
|---|---|---|---|---|
| deepseek-ai DeepSeek-R1 Base | 684B | Dynamic-1.58-bit | 162.11 | Not supported (requires more than 128 GB) |
| deepseek-ai DeepSeek-R1 Distill-Qwen-1.5B | 1.5B | Q4_K_M | 0.90 | Jetson Orin Nano 4 GB, Jetson Nano 4 GB |
| deepseek-ai DeepSeek-R1 Distill-Qwen-7B | 7B | Q5_K_M | 5.25 | Jetson Orin Nano 8 GB, Jetson Orin NX 8 GB, Jetson Xavier NX 8 GB |
| mistralai Mixtral 8x22B-Instruct-v0.1 | 141B | Q4_K_M | 84.60 | Jetson AGX Thor (T5000) 128 GB |
| mistralai Mathstral 7B-v0.1 | 7B | Q5_K_M | 5.25 | Jetson Orin Nano 8 GB, Jetson Orin NX 8 GB, Jetson Xavier NX 8 GB |
| google gemma-3 12b-it | 12B | Q4_K_M | 7.20 | Jetson Orin NX 8 GB, Jetson Orin Nano 8 GB, Jetson Xavier NX 8 GB |
| meta-llama Llama-3.1 70B-Instruct | 70B | Q5_K_M | 52.50 | Jetson AGX Orin 64 GB, Jetson AGX Xavier 64 GB, Jetson AGX Thor (T5000) 128 GB |
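
The Q4_K_M and Q5_K_M figures in the table are consistent with a simple rule of thumb: parameter count multiplied by a fixed number of bytes per weight. The sketch below uses factors inferred from the table itself (for example, 5.25 GB / 7B = 0.75 bytes per parameter for Q5_K_M), so treat them as assumptions rather than exact values. Real usage also needs headroom for the KV cache and the operating system.

```python
# Bytes per parameter inferred from the table rows (assumption, not a spec).
BYTES_PER_PARAM = {"Q4_K_M": 0.60, "Q5_K_M": 0.75}


def estimate_ram_gb(params_billions: float, quantization: str) -> float:
    """Estimate the RAM in GB needed to hold the quantized weights."""
    return round(params_billions * BYTES_PER_PARAM[quantization], 2)


def fits(params_billions: float, quantization: str, device_ram_gb: float) -> bool:
    """Check whether the weights alone fit in the device's RAM."""
    return estimate_ram_gb(params_billions, quantization) <= device_ram_gb


print(estimate_ram_gb(7, "Q5_K_M"))    # 5.25, matches the 7B rows
print(estimate_ram_gb(70, "Q5_K_M"))   # 52.5, matches the Llama-3.1 70B row
print(fits(70, "Q5_K_M", 128))         # True: fits on a 128 GB Jetson AGX Thor
```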