How to Run Llama.cpp Server on Jetson AGX Thor?

Llama.cpp Server on Jetson AGX Thor: Unlocking Edge AI with Large Language Models

Llama.cpp Server is a lightweight, high-performance runtime for large language models (LLMs), designed to run efficiently on both CPU and GPU. Written in C/C++ with minimal dependencies, it avoids the overhead of heavier frameworks and includes hardware-specific optimizations. It uses the GGUF model format, which supports quantization: storing weights at reduced precision drastically cuts memory requirements while largely preserving accuracy. Through its HTTP (REST) API, which includes OpenAI-compatible endpoints, Llama.cpp Server can be integrated into applications, letting developers bring advanced LLM capabilities directly to devices without relying on the cloud.
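GGUF packs a model's weights and metadata into a single file. As a rough illustration of the format, the sketch below reads the fixed header that, per the GGUF specification in the ggml repository, opens every file: the magic bytes `GGUF`, a 32-bit version, then 64-bit counts of tensors and metadata key-value pairs (version 2 and later). It assumes a little-endian file and is not a full parser; the function and class names are hypothetical.

```python
import struct
from typing import NamedTuple

GGUF_MAGIC = b"GGUF"  # first four bytes of every GGUF file


class GGUFHeader(NamedTuple):
    version: int
    tensor_count: int
    metadata_kv_count: int


def read_gguf_header(path: str) -> GGUFHeader:
    """Read the fixed-size header at the start of a GGUF file (little-endian)."""
    with open(path, "rb") as f:
        magic = f.read(4)
        if magic != GGUF_MAGIC:
            raise ValueError(f"not a GGUF file (magic bytes {magic!r})")
        (version,) = struct.unpack("<I", f.read(4))
        if version < 2:
            # GGUF v1 stored the counts as 32-bit integers; not handled in this sketch.
            raise ValueError(f"unsupported GGUF version {version}")
        tensor_count, kv_count = struct.unpack("<QQ", f.read(16))
    return GGUFHeader(version, tensor_count, kv_count)
```

For example, `read_gguf_header("/data/models/Qwen3-4B-Instruct-2507-q4_k_m.gguf")` is a quick way to check that a conversion or quantization step produced a well-formed file.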

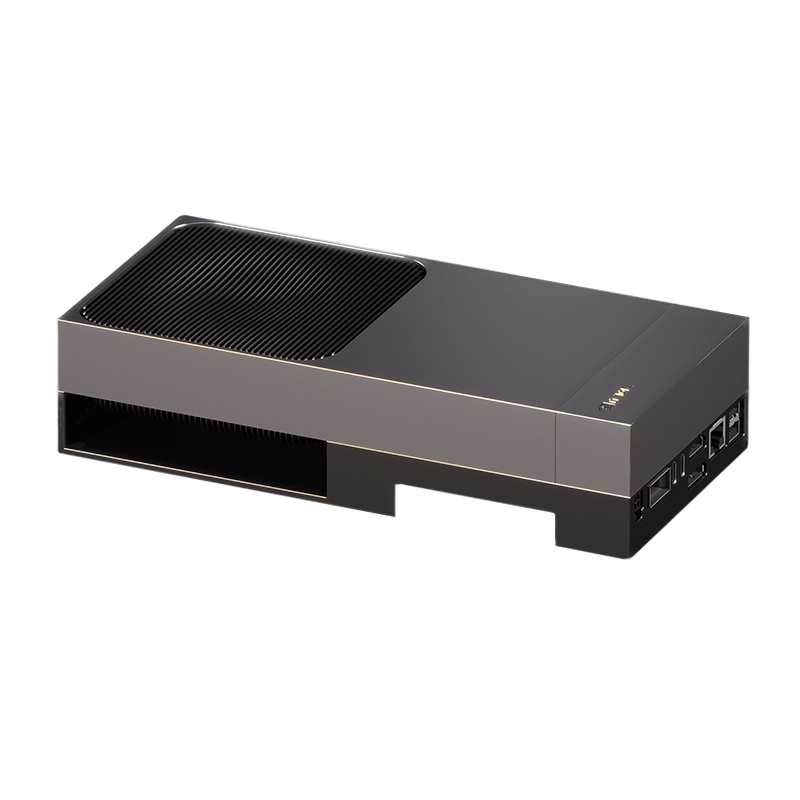

When deployed on NVIDIA Jetson AGX Thor, the advantages become even more compelling:

- GPU acceleration with CUDA offloads model layers to Thor's GPU, enabling real-time inference at the edge.

- It is well suited to edge AI use cases such as robotics, autonomous systems, and industrial automation, where low-latency decision-making is essential.

- Resource efficiency via quantization makes it possible to run models from 7B up to 13B parameters within the limited memory budgets typical of embedded devices.
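As a back-of-the-envelope check on the memory claim, weight storage is roughly parameters × bits per weight ÷ 8 bytes. The sketch below assumes 16 bits for F16, 8.5 for Q8_0 (32 int8 values plus an FP16 scale per block), and about 4.8 for Q4_K_M, which mixes 4-bit and 6-bit blocks, so that figure is an approximate average rather than an exact value. KV cache, activations, and runtime buffers come on top of these numbers.

```python
# Approximate bits stored per weight (assumptions: q4_k_m is an average, not exact).
BITS_PER_WEIGHT = {"f16": 16.0, "q8_0": 8.5, "q4_k_m": 4.8}


def weight_memory_gb(n_params: float, quant: str) -> float:
    """Estimate weight storage in GB (10**9 bytes), ignoring KV cache and runtime buffers."""
    return n_params * BITS_PER_WEIGHT[quant] / 8 / 1e9


if __name__ == "__main__":
    for size in (4e9, 7e9, 13e9):
        row = ", ".join(f"{q}: {weight_memory_gb(size, q):.1f} GB" for q in BITS_PER_WEIGHT)
        print(f"{size / 1e9:.0f}B params -> {row}")
```

Under these assumptions, a 13B model drops from about 26 GB of weights in F16 to under 8 GB in Q4_K_M.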

By combining Llama.cpp Server with Jetson AGX Thor, organizations gain a powerful platform for on-device AI that is private, fast, and cost-effective. No data needs to leave the device, latency is minimized, and the system remains fully adaptable to both prototyping and production scenarios. Supported by an open-source ecosystem, this pairing represents a breakthrough for deploying large language models securely and efficiently at the edge.

Requirements

- JetPack 7

- CUDA 13

- At least 10 GB of free disk space for the Llama.cpp Server image (models need additional space)

- A stable and fast internet connection
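Before pulling the image, a quick pre-flight check can confirm the disk-space requirement. This is a minimal sketch using Python's standard `shutil.disk_usage`; it treats 10 GB as 10^9-byte gigabytes and checks `/` by default, so point it at the filesystem where your container runtime actually stores images (which depends on your setup). The helper names are hypothetical.

```python
import shutil

REQUIRED_FREE_GB = 10  # from the requirements list; covers the server image only


def free_gb(path: str = "/") -> float:
    """Free space, in GB (10**9 bytes), on the filesystem that holds `path`."""
    return shutil.disk_usage(path).free / 1e9


def has_enough_space(path: str = "/", required_gb: float = REQUIRED_FREE_GB) -> bool:
    """True if the filesystem holding `path` has at least `required_gb` GB free."""
    return free_gb(path) >= required_gb


if __name__ == "__main__":
    status = "OK" if has_enough_space() else "insufficient"
    print(f"free space on /: {free_gb():.1f} GB ({status})")
```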

How to Use Llama.cpp Server?

First, download the image:

Then, download the model from Hugging Face. If the model requires access, log in with your token by running:
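The conversion step later reads the model from its Hugging Face snapshot folder. As a sketch, assuming the default Hugging Face hub cache layout (`models--<org>--<name>/refs/main` holding a commit hash, and `snapshots/<hash>/` holding the files) and the repository id `Qwen/Qwen3-4B-Instruct-2507`, the folder can be located without copying the hash by hand. If the container uses its own cache location, pass it via `cache`; the helper names are hypothetical.

```python
import os
from pathlib import Path
from typing import Optional


def default_hub_cache() -> Path:
    """Hub cache dir: $HF_HUB_CACHE, else $HF_HOME/hub, else ~/.cache/huggingface/hub."""
    if os.environ.get("HF_HUB_CACHE"):
        return Path(os.environ["HF_HUB_CACHE"])
    hf_home = os.environ.get("HF_HOME") or str(Path.home() / ".cache" / "huggingface")
    return Path(hf_home) / "hub"


def snapshot_dir(repo_id: str, revision: str = "main", cache: Optional[Path] = None) -> Path:
    """Resolve the snapshot folder of a downloaded repo such as 'Qwen/Qwen3-4B-Instruct-2507'."""
    repo_dir = (cache or default_hub_cache()) / ("models--" + repo_id.replace("/", "--"))
    # refs/<revision> holds the commit hash that names the snapshot folder.
    commit = (repo_dir / "refs" / revision).read_text().strip()
    path = repo_dir / "snapshots" / commit
    if not path.is_dir():
        raise FileNotFoundError(f"snapshot folder missing: {path}")
    return path
```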

Then, install the required Python dependencies with the following command:
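To confirm that the dependencies landed in the Python environment that will run the converter, the modules can be looked up without importing them. The module list below is an assumption for illustration; the requirements files shipped with llama.cpp are the authoritative source.

```python
import importlib.util
from typing import Iterable, List

# Assumed module set for convert_hf_to_gguf.py; llama.cpp's requirements files are authoritative.
CONVERTER_MODULES = ("numpy", "torch", "transformers", "sentencepiece", "gguf")


def missing_modules(names: Iterable[str] = CONVERTER_MODULES) -> List[str]:
    """Return the top-level module names that cannot be found in this interpreter."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    print("all dependencies found" if not missing else f"missing: {', '.join(missing)}")
```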

This set of commands downloads the NVIDIA Performance Libraries (NVPL) local repository package, installs it, adds its signing key to the system, and then installs NVPL via apt-get.

This command takes the Qwen3-4B-Instruct-2507 model downloaded from Hugging Face (located in its snapshot folder, which is named after the commit hash) and uses the convert_hf_to_gguf.py tool to convert the Hugging Face weights (safetensors/PyTorch) into GGUF format, saving the output as /data/models/Qwen3-4B-Instruct-2507-f16.gguf.
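The conversion step can be scripted as follows. This is a sketch that assumes the `--outfile` and `--outtype` options of `convert_hf_to_gguf.py`; the script location and snapshot path in the dry run are hypothetical placeholders, and the helper names are illustrative.

```python
import shlex
import subprocess
import sys
from typing import List


def convert_command(converter: str, model_dir: str, outfile: str, outtype: str = "f16") -> List[str]:
    """argv for llama.cpp's convert_hf_to_gguf.py: HF checkpoint folder -> one GGUF file."""
    return [sys.executable, converter, model_dir, "--outfile", outfile, "--outtype", outtype]


def run(cmd: List[str]) -> None:
    """Echo the command, then run it; raises CalledProcessError on a non-zero exit."""
    print("+", shlex.join(cmd))
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    # Dry run with hypothetical paths; call run(cmd) to actually convert.
    cmd = convert_command(
        "llama.cpp/convert_hf_to_gguf.py",  # hypothetical location of the script
        "/path/to/snapshot",                # hypothetical: the model's snapshot folder
        "/data/models/Qwen3-4B-Instruct-2507-f16.gguf",
    )
    print(shlex.join(cmd))
```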

This command takes the FP16 GGUF model (Qwen3-4B-Instruct-2507-f16.gguf) and runs it through llama-quantize to produce a quantized version (Qwen3-4B-Instruct-2507-q4_k_m.gguf) using the q4_k_m quantization method.

- Input file: /data/models/Qwen3-4B-Instruct-2507-f16.gguf (the FP16 model converted from Hugging Face).

- Output file: /data/models/Qwen3-4B-Instruct-2507-q4_k_m.gguf (smaller, quantized model).

- Quantization type: q4_k_m → a 4-bit "k-quant" scheme (M = medium) that substantially reduces file size and memory use at the cost of a modest loss in quality.
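The quantization step maps onto llama-quantize's positional arguments (input file, output file, type). The sketch below builds that argument list using `Q4_K_M`, the type name as llama-quantize lists it in its help, and adds a helper for comparing file sizes afterwards; the function names are hypothetical. For F16 to Q4_K_M, a ratio of roughly 0.3 is a reasonable expectation, though the exact value depends on the model.

```python
import os
from typing import List


def quantize_command(src: str, dst: str, qtype: str = "Q4_K_M",
                     binary: str = "llama-quantize") -> List[str]:
    """argv for llama-quantize, whose positional arguments are: input.gguf output.gguf type."""
    return [binary, src, dst, qtype]


def size_ratio(src: str, dst: str) -> float:
    """Size of the quantized file as a fraction of the source file."""
    return os.path.getsize(dst) / os.path.getsize(src)


if __name__ == "__main__":
    print(" ".join(quantize_command(
        "/data/models/Qwen3-4B-Instruct-2507-f16.gguf",
        "/data/models/Qwen3-4B-Instruct-2507-q4_k_m.gguf",
    )))
```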

This command launches the llama.cpp server so the quantized model can be served via an HTTP API.
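A sketch of the launch command and a readiness check follows. It assumes llama-server's `-m`, `--host`, `--port`, `-ngl` (layers to offload to the GPU) and `-c` (context size) options and its `/health` endpoint, which returns HTTP 200 once the model has loaded. The port, context size, and layer count are illustrative values, not settings from the original setup; binding to `0.0.0.0` exposes the server on the network, so use `127.0.0.1` to keep it local.

```python
import urllib.request
from typing import List


def server_command(model: str, host: str = "0.0.0.0", port: int = 8080,
                   n_gpu_layers: int = 99, ctx_size: int = 4096) -> List[str]:
    """argv for llama-server; -ngl 99 offloads every layer of a model this size to the GPU."""
    return ["llama-server", "-m", model, "--host", host, "--port", str(port),
            "-ngl", str(n_gpu_layers), "-c", str(ctx_size)]


def is_healthy(base_url: str = "http://127.0.0.1:8080", timeout: float = 2.0) -> bool:
    """True once /health answers HTTP 200; False while loading (503) or if unreachable."""
    try:
        with urllib.request.urlopen(base_url + "/health", timeout=timeout) as resp:
            return resp.status == 200
    except OSError:  # URLError, HTTPError and timeouts are all OSError subclasses
        return False


if __name__ == "__main__":
    print(" ".join(server_command("/data/models/Qwen3-4B-Instruct-2507-q4_k_m.gguf")))
```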

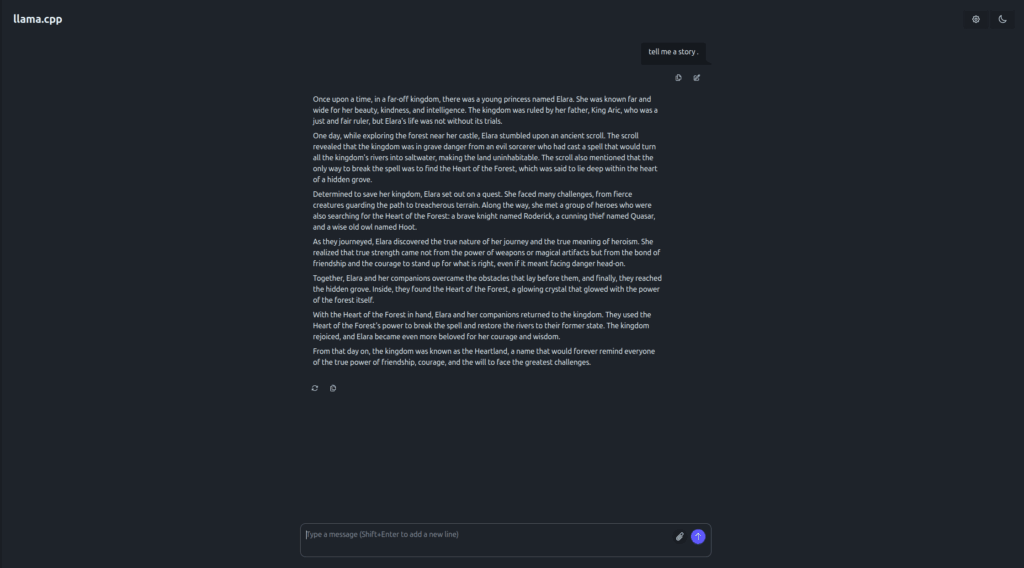

And that's it! You can start chatting.
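To call the server from an application, its OpenAI-compatible chat endpoint can be used with nothing but the standard library. This is a minimal sketch that assumes the server listens on `http://127.0.0.1:8080`; the helper names are hypothetical and error handling is left out.

```python
import json
import urllib.request


def build_chat_request(prompt: str, base_url: str = "http://127.0.0.1:8080",
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build a POST to the OpenAI-compatible /v1/chat/completions endpoint."""
    payload = {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        base_url + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str, **kwargs) -> str:
    """Send one user message and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs), timeout=120) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With the server running, `print(chat("Hello from Jetson AGX Thor!"))` sends a single-turn request; for multi-turn conversations, append earlier messages to the `messages` list.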