How to Run Ollama on Jetson AGX Thor with Open WebUI

What is Ollama?

Ollama is a lightweight and flexible platform that allows you to run large language models (LLMs) directly on your own device. When running on powerful AI hardware such as the NVIDIA Jetson AGX Thor, it provides a local, fast, and secure experience without the need for cloud-based solutions.

Thanks to the high processing power of Jetson AGX Thor, Ollama:

- Runs LLMs locally → Can be used even without an internet connection.

- Utilizes hardware acceleration → Leverages GPU power to generate faster responses.

- Ensures data privacy → All processing happens on-device, so sensitive data never leaves the system.

- Offers flexibility → Different models can be downloaded, customized, and tested.

In short, Ollama leverages the hardware advantages of Jetson AGX Thor to make AI applications more accessible, portable, and secure.

Requirements for AGX Thor

- JetPack 7 must be installed

- Stable high-speed internet connection

- At least 15 GB of free disk space for Ollama itself (model storage not included)
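The disk-space requirement can be checked before pulling anything. The following is a minimal sketch using only the Python standard library; it assumes the container data will live on the root filesystem (`/`), which may not match your Docker setup, and the helper names are illustrative.

```python
import shutil

REQUIRED_FREE_GB = 15  # figure taken from the requirements list above


def free_disk_gb(path: str = "/") -> float:
    """Return free space on the filesystem containing `path`, in GB (10**9 bytes)."""
    return shutil.disk_usage(path).free / 1e9


def has_enough_disk(path: str = "/", required_gb: float = REQUIRED_FREE_GB) -> bool:
    """True if the filesystem holding `path` has at least `required_gb` free."""
    return free_disk_gb(path) >= required_gb


# Assumption: "/" is where the container image will be stored.
print(f"Free space on /: {free_disk_gb('/'):.1f} GB")
print("Enough for Ollama:", has_enough_disk("/"))
```

If your Docker data directory sits on a different drive, pass that path instead of `/`. Remember that models need additional space on top of this figure.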

Installation Process

First, we create a folder to mount into the container.

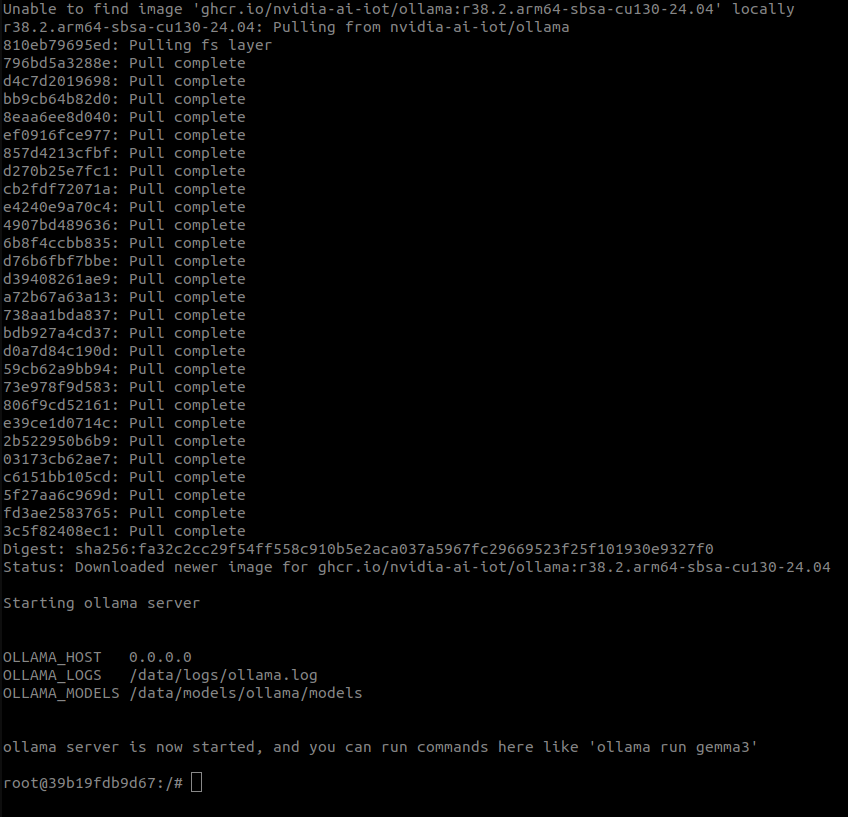

Next, we download the image from the GitHub Container Registry.

The ghcr.io prefix indicates that the image is hosted on the GitHub Container Registry.

To access other images or check for the latest updates, you can visit the following link.

It will take some time to pull (download) the container image.

Once in the container, you will see something like this.

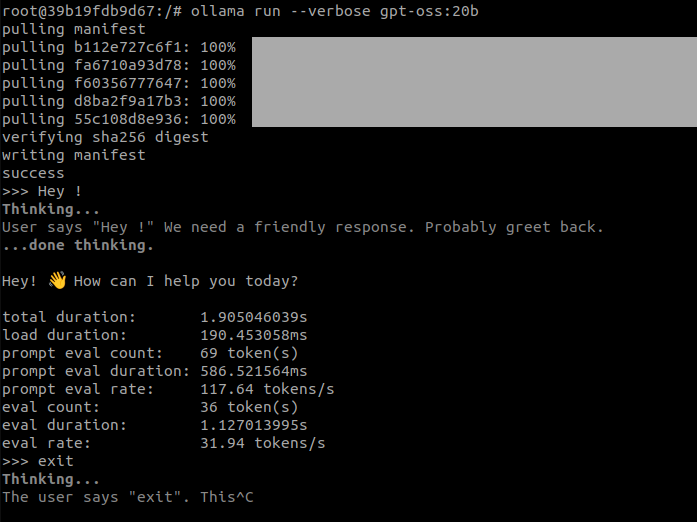

Try running the GPT OSS model (20B parameters) by issuing the command below.

Once ready, it will show something like this:

Troubleshooting

CUDA out of memory

If you encounter CUDA out of memory errors, try running a smaller model.

You can also use quantization to reduce memory usage and run models more efficiently on your device.

Different model sizes and quantized versions can be found here.
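Before picking a smaller or quantized model, it can help to know how much memory is actually free. Jetson modules share one pool of memory between CPU and GPU, so the `MemAvailable` value in `/proc/meminfo` gives a rough upper bound for what a model can use. The sketch below is an illustration, not part of Ollama; the function names are hypothetical.

```python
def parse_mem_available_gib(meminfo_text: str) -> float:
    """Extract MemAvailable from /proc/meminfo text and convert kB to GiB."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemAvailable:"):
            kib = int(line.split()[1])  # second field is the value in kB
            return kib / (1024 ** 2)
    raise ValueError("MemAvailable not found in meminfo text")


def read_mem_available_gib(path: str = "/proc/meminfo") -> float:
    """Read the live value on a Linux system such as the Jetson."""
    with open(path, encoding="ascii") as f:
        return parse_mem_available_gib(f.read())


# Usage on the Jetson itself (Linux only):
#   print(f"{read_mem_available_gib():.1f} GiB available")
```

Treat the result as an estimate: the desktop, other containers, and the model's context cache all draw from the same pool.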

Installing Open WebUI

First, run this command in a terminal:

If you see the “application startup” message on the screen, you can proceed to the next step.

If it says “retrying” and the download shows no progress, either wait or stop the process with Ctrl + C and try again. Either approach should work.

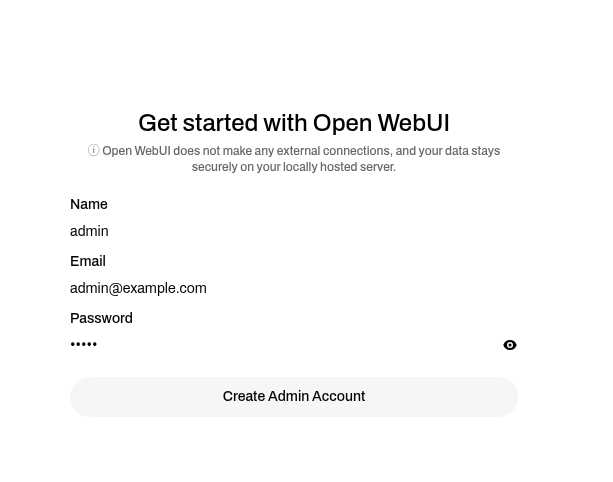

You can then point your browser to http://JETSON_IP:8080 and create an account to log in. The credentials are stored only locally, so placeholder details are fine. If the browser runs on the Jetson itself, you can use localhost instead of JETSON_IP.

Create an account.

⚠️ Be careful! When Open WebUI first launches, no model appears in the Load Models section at the top left. To connect models to Open WebUI, the Ollama container must be reachable on a port. Restart the Ollama container with the following command:

You can check it by sending a curl request:

If you see “Ollama is running”, you can continue using it.
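The same check can be scripted. The sketch below assumes Ollama is listening on its default port 11434 on the same machine (adjust `OLLAMA_URL` if you published a different port), confirms the “Ollama is running” banner, and then lists installed models through Ollama's `/api/tags` endpoint, which is the list Open WebUI should be able to see. The helper names are illustrative.

```python
import json
import urllib.request
from typing import List, Optional

# Assumption: Ollama listens on its default port 11434 on this machine.
OLLAMA_URL = "http://localhost:11434"


def is_ollama_banner(body: str) -> bool:
    """True if the root endpoint returned the expected banner text."""
    return body.strip() == "Ollama is running"


def parse_model_names(tags_json: str) -> List[str]:
    """Pull model names out of the JSON returned by GET /api/tags."""
    data = json.loads(tags_json)
    return [m["name"] for m in data.get("models", [])]


def fetch(url: str, timeout: float = 5.0) -> str:
    """GET a URL and return the body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")


def ollama_models(base_url: str = OLLAMA_URL) -> Optional[List[str]]:
    """Return installed model names, or None if the server is unreachable."""
    try:
        if not is_ollama_banner(fetch(base_url + "/")):
            return None
        return parse_model_names(fetch(base_url + "/api/tags"))
    except OSError:  # covers connection refused, timeouts, and HTTP errors
        return None


# Usage: print(ollama_models())  # None means the port is not reachable
```

An empty list means the server is up but no model has been pulled yet; `None` points to a port or networking problem instead.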

Which Jetson should I choose for my LLM?

The table below lists the RAM requirements of popular LLMs, together with the smallest Jetson module that meets them. Choose the one that best fits your needs.

| Model | Parameters | Quantization | Required RAM (GB) | Recommended Minimum Jetson |
|---|---|---|---|---|
| DeepSeek-R1 | 671B | Dynamic-1.58-bit (MoE 1.5-bit + other layers 4–6-bit) | 159.03 | Not supported (exceeds 128 GB) |
| DeepSeek-R1 Distill-Qwen-1.5B | 1.5B | Q4_K_M | 0.90 | Jetson Orin Nano 4 GB, Jetson Nano 4 GB |
| DeepSeek-R1 Distill-Qwen-7B | 7B | Q5_K_M | 5.25 | Jetson Orin Nano 8 GB, Jetson Orin NX 8 GB, Jetson Xavier NX 8 GB |
| Qwen 2.5 | 14B | FP16 | 33.60 | Jetson AGX Orin 64 GB, Jetson AGX Xavier 64 GB |
| CodeLlama | 34B | Q4_K_M | 20.40 | Jetson AGX Orin 32 GB, Jetson AGX Xavier 32 GB |
| Llama 3.2 Vision | 90B | Q5_K_M | 67.50 | Jetson AGX Thor (T5000) 128 GB |
| Phi-3 | 3.8B | FP16 | 9.12 | Jetson Orin NX 16 GB |
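The Required RAM column appears to follow a simple rule of thumb: parameters × bits per weight ÷ 8, plus about 20% overhead. That formula is inferred from the table values rather than stated by the source, and it uses nominal bit widths (4 for Q4_K_M, 5 for Q5_K_M, 16 for FP16). A minimal sketch for estimating other models under the same assumption:

```python
from typing import List, Optional

OVERHEAD = 1.2  # assumed ~20% allowance; inferred from the table, not official
JETSON_MEMORY_TIERS_GB = [4, 8, 16, 32, 64, 128]  # module sizes named in the table


def estimate_ram_gb(params_billions: float, bits_per_weight: float,
                    overhead: float = OVERHEAD) -> float:
    """Rule-of-thumb RAM estimate in GB: weights size times an overhead factor."""
    return params_billions * bits_per_weight / 8 * overhead


def smallest_fitting_tier(required_gb: float,
                          tiers: List[int] = JETSON_MEMORY_TIERS_GB) -> Optional[int]:
    """Smallest memory tier (GB) that holds the estimate, or None if none does."""
    for tier in sorted(tiers):
        if required_gb <= tier:
            return tier
    return None


# Reproduce two rows of the table.
for name, params, bits in [("DeepSeek-R1 Distill-Qwen-7B", 7, 5),
                           ("Qwen 2.5 14B FP16", 14, 16)]:
    need = estimate_ram_gb(params, bits)
    print(f"{name}: {need:.2f} GB -> {smallest_fitting_tier(need)} GB module")
```

Real usage also depends on context length and on how much memory the operating system already occupies, so leave some headroom beyond the estimate.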