Jetson Generative AI – Agent Studio

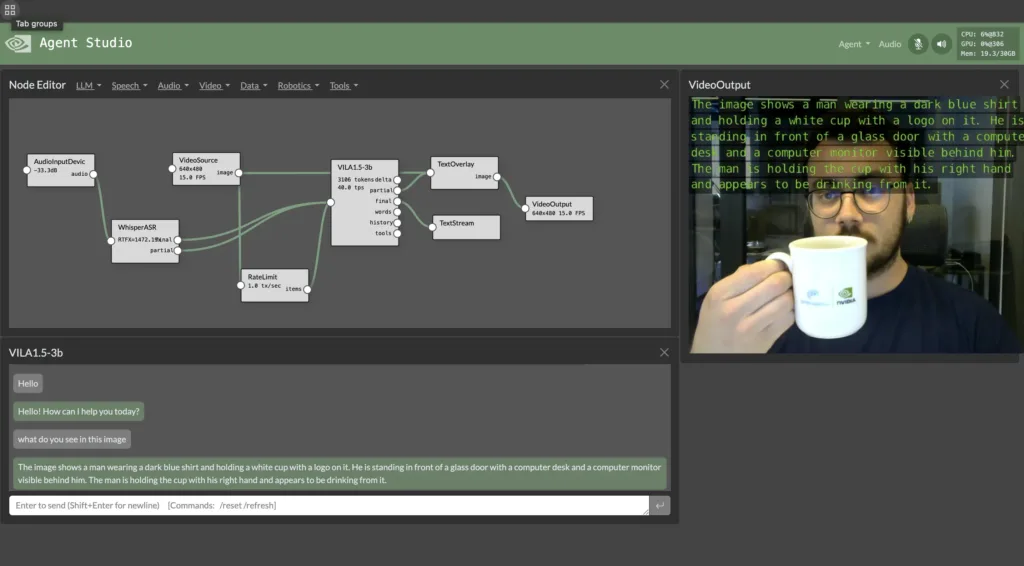

Rapidly design and experiment with your own automation agents, personal assistants, and edge AI systems in an interactive sandbox that connects multimodal LLMs, speech and vision transformers, vector databases, prompt templating, and function calling to live sensors and I/O. Agent Studio is optimized for deployment onboard Jetson, with on-device compute, low-latency streaming, and unified memory.

The pipeline above shows a typical Agent Studio workflow: you speak to the model through speech recognition and ask questions about what the camera sees, and multimodal LLMs process both the video input and your voice commands to respond to you.

Features

- Edge LLM inference with quantization and KV caching (NanoLLM)

- Realtime vision/language models (such as Live Llava and Video VILA)

- Speech recognition and synthesis (Whisper ASR, Piper TTS, Riva)

- Multimodal vector database from NanoDB

- Audio and video streaming (WebRTC, RTP, RTSP, V4L2)

- Performance monitoring and profiling

- Native bot-callable functions and agent tools

- Extensible plugins with auto-generated UI controls

- Save, load, and export pipeline presets

Requirements

| Hardware / Software | Notes |
|---|---|
| Jetson AI Kit / Dev Kit with ≥ 8 GB RAM | Jetson AGX Orin / Orin NX recommended for best latency |
| JetPack 6 (L4T r36.x) | Needed for the latest pre-built containers |
| NVMe SSD | Highly recommended for storage speed and space |
| Hugging Face token | Needed for gated Meta-Llama weights |
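To confirm the memory requirement on the device itself, you can read `MemTotal` from `/proc/meminfo`. The Python sketch below is illustrative only. The 7.0 GiB threshold is an assumption: an 8 GB module reports somewhat less than 8 GiB of `MemTotal` because part of the physical memory is reserved, so a strict 8.0 comparison would reject it.

```python
import re
from pathlib import Path

# Assumed threshold: 8 GB modules report a bit less than 8 GiB in MemTotal,
# so allow some slack below the nominal size.
MIN_TOTAL_GIB = 7.0


def mem_total_gib(meminfo_text: str) -> float:
    """Return MemTotal from /proc/meminfo-style text, converted from kB to GiB."""
    match = re.search(r"^MemTotal:\s+(\d+)\s+kB", meminfo_text, re.MULTILINE)
    if match is None:
        raise ValueError("MemTotal not found")
    return int(match.group(1)) / (1024 * 1024)


def meets_ram_requirement(meminfo_text: str, min_gib: float = MIN_TOTAL_GIB) -> bool:
    """True if the reported total RAM is at least min_gib."""
    return mem_total_gib(meminfo_text) >= min_gib


if __name__ == "__main__":
    meminfo = Path("/proc/meminfo")
    if meminfo.exists():  # Linux only
        text = meminfo.read_text()
        print(f"MemTotal: {mem_total_gib(text):.1f} GiB, "
              f"meets requirement: {meets_ram_requirement(text)}")
```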

Step-by-Step Setup

1. Clone the repository

2. Enter the repository and install dependencies

3. Launch Agent Studio

This starts the Agent Studio server on your device.

Note: If you plan to use speech features (Whisper ASR, TTS, etc.), you’ll need to set up and start the Riva server first:

- Download the Riva Quick Start scripts from NGC: riva_quickstart_arm64

- Initialize and start Riva:

Wait until the Riva server is fully initialized before using speech plugins in Agent Studio.
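One way to tell when Riva is ready is to poll its gRPC port until it accepts TCP connections. This Python sketch assumes Riva listens on its default gRPC port, 50051, on the same device. An open port only shows that the server is listening, not that every model has finished loading, so treat it as a first check rather than a guarantee.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 300.0, interval: float = 2.0) -> bool:
    """Poll host:port until a TCP connection succeeds or timeout seconds elapse."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)  # wait before retrying
```

For example, `wait_for_port("localhost", 50051)` blocks for up to five minutes and returns `True` once the (assumed) Riva port opens.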

4. Open the Web UI

You can then navigate your browser to https://IP_ADDRESS:8050, replacing IP_ADDRESS with the IP address of your Jetson.

- You can load a preset at startup with the --load flag (for example, --load /data/nano_llm/presets/xyz.json).

- The default port is 8050, but it can be changed with --web-port (and --ws-port for the websocket port).
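If you script the launch, the flags above can be assembled programmatically. The Python sketch below only builds an argument list and the matching browser URL. The entry point `python3 -m nano_llm.studio` is an assumption about how the server is started inside the container, and the helper names are hypothetical.

```python
from typing import List, Optional

DEFAULT_WEB_PORT = 8050  # default Web UI port stated in this guide


def studio_args(load: Optional[str] = None,
                web_port: Optional[int] = None,
                ws_port: Optional[int] = None) -> List[str]:
    """Build a hypothetical Agent Studio command line from the documented flags."""
    # Assumed entry point; adjust to however you launch Agent Studio.
    args = ["python3", "-m", "nano_llm.studio"]
    if load is not None:
        args += ["--load", load]                # preset to load at startup
    if web_port is not None:
        args += ["--web-port", str(web_port)]   # port for the Web UI
    if ws_port is not None:
        args += ["--ws-port", str(ws_port)]     # port for the websocket
    return args


def web_ui_url(ip_address: str, web_port: int = DEFAULT_WEB_PORT) -> str:
    """URL to open in the browser."""
    return f"https://{ip_address}:{web_port}"
```

For example, `studio_args(load="/data/nano_llm/presets/xyz.json", web_port=8060)` appends `--load /data/nano_llm/presets/xyz.json --web-port 8060` to the base command; flags you leave as `None` are omitted so the server keeps its defaults.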

Dev Mode

To make code changes without rebuilding the container, clone the NanoLLM sources and then mount them over /opt/NanoLLM.

You can then edit the source from outside the container. In the terminal that opens inside the container, you can also install other packages with apt, pip, etc.
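The mount itself is an ordinary Docker bind mount. As a hedged sketch, assuming your launcher passes `-v` through to `docker run`, the mount argument for a local NanoLLM checkout could be built like this (the helper name is hypothetical):

```python
import os
from typing import List

CONTAINER_SRC = "/opt/NanoLLM"  # location of the NanoLLM sources inside the container


def dev_mount_args(host_src: str) -> List[str]:
    """Return Docker-style -v arguments that mount host_src over /opt/NanoLLM."""
    # Docker expects an absolute host path for a bind mount.
    host_path = os.path.abspath(host_src)
    if not os.path.isdir(host_path):
        raise FileNotFoundError(f"NanoLLM checkout not found: {host_path}")
    return ["-v", f"{host_path}:{CONTAINER_SRC}"]
```

Resolving the path up front avoids a relative path like `NanoLLM` being misread, and failing early on a missing checkout is clearer than starting a container with an empty mount.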

Troubleshooting

Out-of-Memory Errors

If you encounter out-of-memory errors when loading models or running pipelines, this typically means your Jetson device doesn’t have enough RAM to handle the current configuration.

Solutions:

- Use smaller quantized models (4-bit instead of 8-bit)

- Clear the model cache using the “Clear Cache” button in the Agent menu

- Close other applications running on your Jetson

- Add swap space to your system:
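Smaller quantized models help because weight memory scales with the number of bits per parameter. The Python sketch below estimates weight storage only. The KV cache, activations, vision encoders, and runtime overhead come on top, so real usage is higher. The 8B parameter count is illustrative.

```python
def weight_bytes(num_params: int, bits_per_param: int) -> int:
    """Approximate storage for model weights: parameters x bits / 8."""
    return num_params * bits_per_param // 8


def weight_gb(num_params: int, bits_per_param: int) -> float:
    """Same estimate in decimal gigabytes (1 GB = 10**9 bytes)."""
    return weight_bytes(num_params, bits_per_param) / 1e9


if __name__ == "__main__":
    params = 8_000_000_000  # illustrative 8B-parameter model
    for bits in (16, 8, 4):
        print(f"{bits:>2}-bit weights: ~{weight_gb(params, bits):.0f} GB")
```

For an 8B model this gives about 16 GB at 16-bit, 8 GB at 8-bit, and 4 GB at 4-bit, which is why 4-bit weights are the practical choice on an 8 GB device.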

Container Fails to Start

If the jetson-containers command fails to launch Agent Studio, this usually indicates a problem with your Docker setup or JetPack installation.

Solutions:

- Ensure you’re running JetPack 6 (L4T r36.x):

- Verify Docker daemon is running:

- Check if you have sufficient disk space (>25GB free)

- Restart your Jetson device and try again
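The JetPack and disk-space checks can be scripted. This Python sketch assumes the L4T release is recorded in `/etc/nv_tegra_release` in its usual `# R36 (release), REVISION: ...` form, and uses 25 GB (decimal) as the free-space threshold from the list above. If Docker stores its images on a separate drive, point `has_free_space` at that mount instead of `/`.

```python
import re
import shutil
from pathlib import Path
from typing import Optional, Tuple

MIN_FREE_BYTES = 25 * 10**9  # ">25GB free" from the checklist, in decimal GB


def parse_l4t_release(text: str) -> Optional[Tuple[int, str]]:
    """Extract (major release, revision) from nv_tegra_release text, e.g. (36, '3.0')."""
    match = re.search(r"#\s*R(\d+)\s*\(release\),\s*REVISION:\s*([\d.]+)", text)
    if match is None:
        return None
    return int(match.group(1)), match.group(2)


def is_jetpack6(text: str) -> bool:
    """JetPack 6 corresponds to L4T r36.x."""
    release = parse_l4t_release(text)
    return release is not None and release[0] == 36


def has_free_space(path: str = "/", min_free: int = MIN_FREE_BYTES) -> bool:
    """True if the filesystem holding path has at least min_free bytes available."""
    return shutil.disk_usage(path).free >= min_free


if __name__ == "__main__":
    release_file = Path("/etc/nv_tegra_release")
    if release_file.exists():
        print("JetPack 6:", is_jetpack6(release_file.read_text()))
    print("Enough free disk space:", has_free_space("/"))
```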

Web UI Not Accessible

If you can’t access the Agent Studio web interface at https://IP_ADDRESS:8050, this is typically a network or firewall issue.

Solutions:

- Check if the service is actually running by looking for the “serving at” message in the terminal

- Verify port 8050 is not blocked by a firewall (if you have ufw installed):

If you don’t have a firewall installed, you can skip this step.

- Try accessing from localhost first: https://localhost:8050.

- If accessing remotely, ensure you’re using the correct IP address of your Jetson.
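To find the address to use from a remote browser, you can ask the operating system which local interface it would use for outbound traffic. This Python sketch relies on a common UDP technique: calling `connect()` on a datagram socket sends no packets but makes the kernel choose a source address. The probe address 8.8.8.8 is an arbitrary choice used only for route selection; on a device with several interfaces, double-check the result.

```python
import socket


def local_ip(probe_host: str = "8.8.8.8", probe_port: int = 80) -> str:
    """Best-effort LAN IP of this machine; falls back to 127.0.0.1 with no route."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        # connect() on a UDP socket only selects a route; nothing is transmitted.
        sock.connect((probe_host, probe_port))
        return sock.getsockname()[0]
    except OSError:
        return "127.0.0.1"
    finally:
        sock.close()


def agent_studio_url(port: int = 8050) -> str:
    """URL to open from another machine on the same network."""
    return f"https://{local_ip()}:{port}"
```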

Model Download Fails

When models fail to download, the cause is usually Hugging Face authentication or network connectivity.

Solutions:

- Verify your Hugging Face token is valid and properly exported:

- Ensure you have requested access to gated models (like Llama) on Hugging Face

- Check your internet connection and try again

- For persistent issues, try downloading models manually first
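A quick offline check catches the most common token mistakes: an unset variable, stray whitespace, or the wrong value pasted. This Python sketch assumes the token is exported as `HUGGINGFACE_TOKEN` (adjust the name to match your launch command) and that it is a Hugging Face user access token, which begins with `hf_`. It does not contact the Hub, so it cannot confirm that the token is valid or that gated access was granted.

```python
import os
from typing import Mapping, Tuple

TOKEN_VAR = "HUGGINGFACE_TOKEN"  # assumed environment variable name


def check_hf_token(env: Mapping[str, str] = os.environ, var: str = TOKEN_VAR) -> Tuple[bool, str]:
    """Offline check that a Hugging Face token looks plausibly exported."""
    token = env.get(var)
    if not token:
        return False, f"{var} is not set"
    if token != token.strip():
        return False, f"{var} has leading or trailing whitespace"
    if not token.startswith("hf_"):
        return False, f"{var} does not look like a Hugging Face access token"
    return True, f"{var} is set"
```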

Camera Not Detected

If video input devices aren’t recognized by Agent Studio, this is typically a permissions or driver issue.

Solutions:

- Check available video devices:

- If no devices are found, check media devices:

- Verify camera hardware with v4l-utils:

- Ensure your user has access to video devices:

- For CSI cameras on Jetson, you may need to enable the camera with:

- Restart the container after making permission changes

Audio Devices Not Working

Audio input/output issues can involve different audio subsystems, depending on which features you use in Agent Studio.

For basic audio (PulseAudio):

- Check if PulseAudio is running:

  (No output means it’s running correctly)

- List available audio devices:

- Restart PulseAudio if needed:

- Ensure your user is in the audio group:
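For the group-membership check, a Python sketch using the standard `pwd` and `grp` modules (Linux/Unix only) covers both supplementary groups and the user's primary group. Keep in mind that a newly added group membership only takes effect in a fresh login session.

```python
import grp
import os
import pwd


def user_in_group(user: str, group: str) -> bool:
    """True if user belongs to group, either as primary or supplementary group."""
    try:
        group_entry = grp.getgrnam(group)
    except KeyError:
        return False  # group does not exist on this system
    if user in group_entry.gr_mem:
        return True  # listed as a supplementary member
    try:
        return pwd.getpwnam(user).pw_gid == group_entry.gr_gid
    except KeyError:
        return False  # unknown user


if __name__ == "__main__":
    me = pwd.getpwuid(os.getuid()).pw_name
    print(f"{me} in audio group: {user_in_group(me, 'audio')}")
```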

For speech features (Whisper ASR, TTS, etc.):

- Make sure the Riva server is up and running using the official Quick Start scripts:

- If speech plugins aren’t working, check that the Riva server is still running.

Slow Performance

If Agent Studio is running slowly or with high latency, this usually indicates resource constraints or suboptimal configuration.

Solutions:

- Use quantized models (4-bit quantization provides best speed/quality balance)

- Enable GPU acceleration and ensure CUDA is properly configured

- Use an NVMe SSD for faster model loading and data access

- Reduce model complexity or use smaller models

- Monitor system resources in the Agent Studio UI and adjust accordingly

- Use the RateLimiter plugin to throttle data sources and balance resources
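The idea behind throttling a data source fits in a few lines. The Python class below is a hypothetical illustration, not the RateLimiter plugin's code: it forwards an item only if at least `1/rate` seconds have passed since the last forwarded item and drops the rest, so downstream models see at most `rate` items per second.

```python
import time
from typing import Callable, Optional


class MinIntervalLimiter:
    """Drop items that arrive faster than `rate` per second (hypothetical sketch)."""

    def __init__(self, rate: float, clock: Callable[[], float] = time.monotonic):
        if rate <= 0:
            raise ValueError("rate must be positive")
        self.interval = 1.0 / rate       # minimum spacing between forwarded items
        self.clock = clock               # injectable for testing
        self._last: Optional[float] = None

    def allow(self) -> bool:
        """Return True if an item arriving now should be forwarded."""
        now = self.clock()
        if self._last is None or now - self._last >= self.interval:
            self._last = now
            return True
        return False
```

With `MinIntervalLimiter(rate=2.0)` in front of a 30 fps camera, calling `allow()` per frame forwards at most two frames per second, freeing GPU time for the models that consume them.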

Plugin Connections Fail

When you can’t connect plugins or data isn’t flowing between them, this is usually a data type mismatch or configuration issue.

Solutions:

- Verify that output data types from one plugin match the input requirements of the next

- Check plugin documentation for supported input/output formats

- Ensure plugins are properly configured with required parameters

- Try connecting plugins step by step to isolate the problematic connection

- Restart the pipeline or clear the cache if connections become unstable
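To make the type-matching tip concrete, here is a hypothetical Python sketch of checking a chain of plugins before wiring it up. The plugin names and type labels are invented for illustration and do not reflect NanoLLM's actual plugin classes. Each plugin declares the input types it accepts and the output type it produces, and the check reports the first link where they disagree, the same step-by-step isolation suggested above.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Optional


@dataclass(frozen=True)
class PluginSpec:
    """Hypothetical description of a plugin's I/O types."""
    name: str
    inputs: FrozenSet[str]
    output: str


def first_mismatch(chain: List[PluginSpec]) -> Optional[str]:
    """Return a message for the first incompatible link, or None if all links match."""
    for upstream, downstream in zip(chain, chain[1:]):
        if upstream.output not in downstream.inputs:
            return (f"{upstream.name} outputs {upstream.output!r}, but "
                    f"{downstream.name} accepts {sorted(downstream.inputs)}")
    return None


if __name__ == "__main__":
    asr = PluginSpec("ASR", frozenset({"audio"}), "text")
    llm = PluginSpec("LLM", frozenset({"text", "image"}), "text")
    tts = PluginSpec("TTS", frozenset({"text"}), "audio")
    camera = PluginSpec("Camera", frozenset(), "image")
    print(first_mismatch([asr, llm, tts]))  # None
    print(first_mismatch([camera, tts]))
```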

For more information about NanoLLM and advanced configurations, visit the NanoLLM GitHub repository.