Jetson Generative AI – Flowise

Flowise is an open-source, low-code tool for building customized LLM applications and AI agents. Its visual, drag-and-drop builder lets you create AI-driven solutions with little or no code.

Why Flowise on Jetson?

Flowise vs n8n: Choosing the Right Tool

At first glance, Flowise and n8n might seem pretty similar – both offer visual workflow builders and can handle AI tasks. But when you dig deeper, they each have their own advantages and disadvantages that make them better suited for different types of projects.

Core Philosophy Difference

Flowise: AI-first platform specializing in LLM workflows

n8n: General-purpose automation platform that can do AI

| Capability | Flowise | n8n |
|---|---|---|
| AI Focus | LLM-optimized with built-in prompt engineering | 300+ integrations, AI as add-on |
| Learning Curve | Easier for AI newcomers | Better for developers |
| Code Execution | Limited, component-focused | Full JavaScript/Python support |
| Performance | Good for moderate AI workloads | Enterprise-grade with scaling |
| Templates | AI-focused (RAG, Agents, Research) | 800+ general automation templates |

Choose Flowise When You:

- Want rapid AI prototyping – Get chatbots running in minutes

- Focus on conversational AI – Built-in conversation management

- Need AI-specific tools – Native LangChain/LlamaIndex integration

- Prefer simplicity – Lower learning curve for LLM projects

Choose n8n When You:

- Need enterprise integrations – Connect AI with existing business systems

- Want code flexibility – Custom JavaScript/Python execution

- Require complex workflows – Advanced scraping, data processing

- Build beyond AI – General automation across multiple systems

For the Jetson:

For edge AI applications, Flowise’s specialization often wins because:

- Local LLM integration is seamless (Ollama support)

- Rapid iteration matters more than complex integrations

- AI-first design reduces development complexity

- Template marketplace accelerates deployment

If your Jetson project is primarily about AI/LLM applications, Flowise gets you there faster. If you need AI as part of broader system automation, n8n provides more flexibility.

Installation Methods

You have a few different ways to get Flowise running on your Jetson. Docker installation is probably your best bet since it’s the most straightforward and handles all the dependencies for you. If you prefer more control, you can do a local Node.js installation. There’s also the option of cloud deployment if you need external access, though that defeats some of the edge computing benefits.

Docker Installation

Note: Ensure your Jetson has Docker installed. For more information visit https://docs.docker.com/engine/install/ .

Web UI: http://localhost:3000
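Docker can report the container as started a little before the Node.js server inside it accepts connections. If you script your setup, a minimal Python sketch like the one below (standard library only) can poll the Web UI URL until it answers with HTTP 200. The function name and the timeout defaults are illustrative choices, not something Flowise provides.

```python
import http.client
import time
import urllib.request


def wait_for_flowise(base_url: str = "http://localhost:3000",
                     timeout_s: float = 60.0,
                     interval_s: float = 2.0) -> bool:
    """Poll the Flowise Web UI until it answers HTTP 200 or `timeout_s` runs out."""
    deadline = time.monotonic() + timeout_s
    while True:
        try:
            with urllib.request.urlopen(base_url, timeout=interval_s) as resp:
                if resp.status == 200:
                    return True
        except (OSError, http.client.HTTPException):
            # URLError, HTTPError, refused connections and socket timeouts are
            # OSError subclasses: the server is not ready yet, so keep polling.
            pass
        if time.monotonic() >= deadline:
            return False
        time.sleep(interval_s)


# Example, right after starting the container:
#   print(wait_for_flowise("http://localhost:3000"))
```

A False result after the timeout usually means the container exited during startup; docker logs flowise (see Troubleshooting) will show why.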

Local Installation

Note: Ensure Node.js is installed on your Jetson (Node v18.15.0 or v20 is supported).
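If you're not sure which Node.js you have, this small Python sketch checks the output of node --version against the range in the note above. It reads the note as "v18.15.0 or later within the v18 line, or any v20 release", which is an interpretation; newer major versions are reported as outside the documented range rather than as broken, so check the Flowise release notes if you run one.

```python
from __future__ import annotations

import re
import subprocess


def parse_node_version(text: str) -> tuple[int, int, int]:
    """Turn `node --version` output such as 'v20.11.1' into (20, 11, 1)."""
    match = re.search(r"v?(\d+)\.(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError(f"unrecognised Node.js version string: {text!r}")
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def is_supported_node(version: tuple[int, int, int]) -> bool:
    """Assumed reading of the note: v18.15.0 or later within v18, or any v20."""
    major, minor, _patch = version
    if major == 18:
        return minor >= 15
    return major == 20


def installed_node_version() -> tuple[int, int, int]:
    """Run the local `node` binary; raises FileNotFoundError if Node.js is missing."""
    result = subprocess.run(["node", "--version"], capture_output=True,
                            text=True, check=True)
    return parse_node_version(result.stdout)


# Example:
#   version = installed_node_version()
#   print(version, "OK" if is_supported_node(version) else "outside the documented range")
```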

1. Install Node.js on Jetson:

Quick Start

2. Install Flowise:

3. Start Flowise:

Open: http://localhost:3000

Getting Started

First Setup

- Access Flowise:

- Open a browser to http://your-jetson-ip:3000

- Log in with your configured credentials

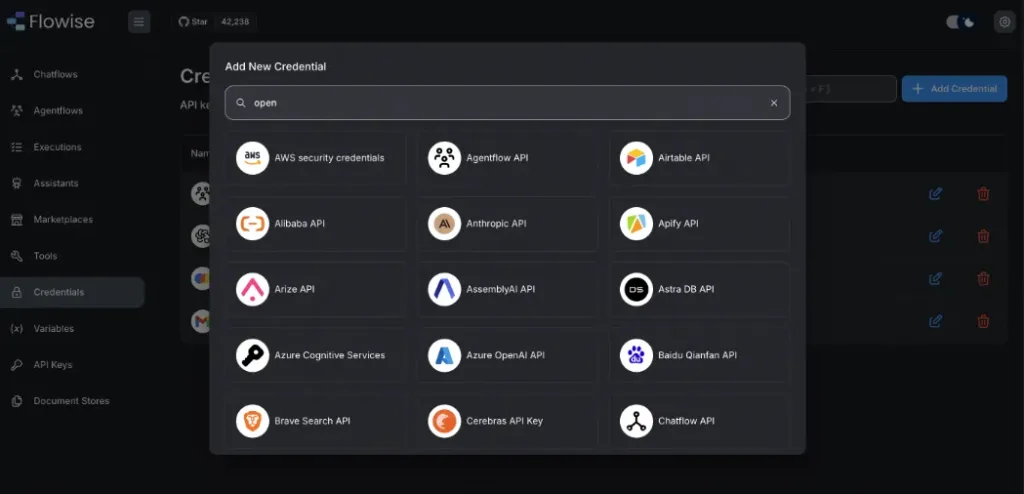

- Configure API Keys:

- Go to the “Credentials” section

- Add your LLM provider API keys (OpenAI, Anthropic, etc.)

Flowise Management Features

Once you’re inside Flowise, you’ll find all the management tools neatly organized in the sidebar. The interface is pretty intuitive – you’ve got your Chatflows for basic conversational AI, Agentflows for more complex multi-agent setups, and a Marketplace full of templates from the community.

There are separate sections for Tools and utility functions, Assistants for managing your deployed AI instances, and Executions where you can see how all your workflows are performing. The Document Stores section is where you upload files for your knowledge base, while API Keys and Credentials handle all your authentication securely.

What’s really nice is how everything ties together. You can track execution performance across all your workflows, manage variables globally so you don’t have to configure the same things over and over, and organize your documents with proper version control. Plus, all your API keys and secrets are encrypted and stored securely.

Building Advanced Agent Workflows

For more complex applications, Flowise also supports advanced agent workflows (Agentflows) that can handle multi-step reasoning and autonomous decision-making.

Main Flowise dashboard showing sidebar navigation, management options, and credentials setup.

Local LLM Setup (Recommended for Jetson)

Pull AI models into your separate Ollama container:

Note: Choose models based on your Jetson’s RAM – use 3B models for 4-8GB RAM, 7B-8B models for 16GB+ RAM.
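To apply this rule automatically, the Python sketch below reads MemTotal from /proc/meminfo, picks a model size, and asks Ollama's REST API (POST /api/pull, the API counterpart of ollama pull) to download it. The two model tags are example choices for the 3B and 8B tiers, not recommendations from this guide, and the 12 GiB cut-off is an assumption: MemTotal always reads somewhat below a module's nominal capacity, so the threshold sits between the 8 GB and 16 GB sizes rather than at exactly 16.

```python
from __future__ import annotations

import json
import urllib.request

# Example tags for the two tiers in the note (assumptions, swap in your own).
SMALL_MODEL = "llama3.2:3b"   # 3B tier for 4-8 GB boards
LARGE_MODEL = "llama3.1:8b"   # 7B-8B tier for 16 GB+ boards
THRESHOLD_GIB = 12.0          # between the 8 GB and 16 GB module sizes


def total_ram_gib(meminfo_text: str) -> float:
    """Read MemTotal (reported in kB) from the contents of /proc/meminfo."""
    for line in meminfo_text.splitlines():
        if line.startswith("MemTotal:"):
            return int(line.split()[1]) / (1024 * 1024)
    raise ValueError("MemTotal not found in meminfo text")


def suggest_model(ram_gib: float) -> str:
    """Pick the 3B tier on smaller boards and the 7B-8B tier on 16 GB+ boards."""
    return LARGE_MODEL if ram_gib >= THRESHOLD_GIB else SMALL_MODEL


def pull_model(model: str, base_url: str = "http://localhost:11434") -> str:
    """Ask Ollama's POST /api/pull to download `model`; blocks until it finishes."""
    # Current Ollama docs name this field "model"; older releases documented "name".
    body = json.dumps({"model": model, "stream": False}).encode()
    request = urllib.request.Request(f"{base_url}/api/pull", data=body,
                                     headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["status"]  # "success" when done


# Example, on the Jetson with Ollama listening on port 11434:
#   with open("/proc/meminfo") as f:
#       model = suggest_model(total_ram_gib(f.read()))
#   print(model, pull_model(model))
```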

Template Marketplace

Pre-Built Templates for Quick Start

Access Marketplace: Click “Marketplaces” in the sidebar and use filters to find relevant templates. Click on the template you want to use and select “Use Template”. Modify nodes and connections for your specific needs.

Building Your First Chatbot

You can start from a template (recommended):

- Navigate to Marketplace: Go to “Marketplaces” → “Community Templates”

- Select Template: Choose “Basic” or “Customer Support” template

- Import and Customize: Click template → modify for your needs

- Configure Ollama: Update Chat Model nodes to use http://localhost:11434
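Before pointing the Chat Model nodes at that URL, it's worth confirming that Ollama answers there and already has the model you plan to select. The Python sketch below uses Ollama's GET /api/tags endpoint, which lists locally installed models; the helper names are hypothetical.

```python
from __future__ import annotations

import json
import urllib.request


def parse_model_names(tags_json: str) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    return [entry["name"] for entry in json.loads(tags_json).get("models", [])]


def installed_models(base_url: str = "http://localhost:11434") -> list[str]:
    """List the models the Ollama server at `base_url` has available locally."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as response:
        return parse_model_names(response.read().decode())


def has_model(wanted: str, available: list[str]) -> bool:
    """Ollama treats a name without a tag as ':latest', so normalise first."""
    if ":" not in wanted:
        wanted += ":latest"
    return wanted in available


# Example:
#   print(has_model("llama3.2:3b", installed_models()))
```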

Or you can build from scratch:

Step 1: Create New Chatflow

- Go to “Chatflows” tab

- Click “Add New”

- Name your chatflow

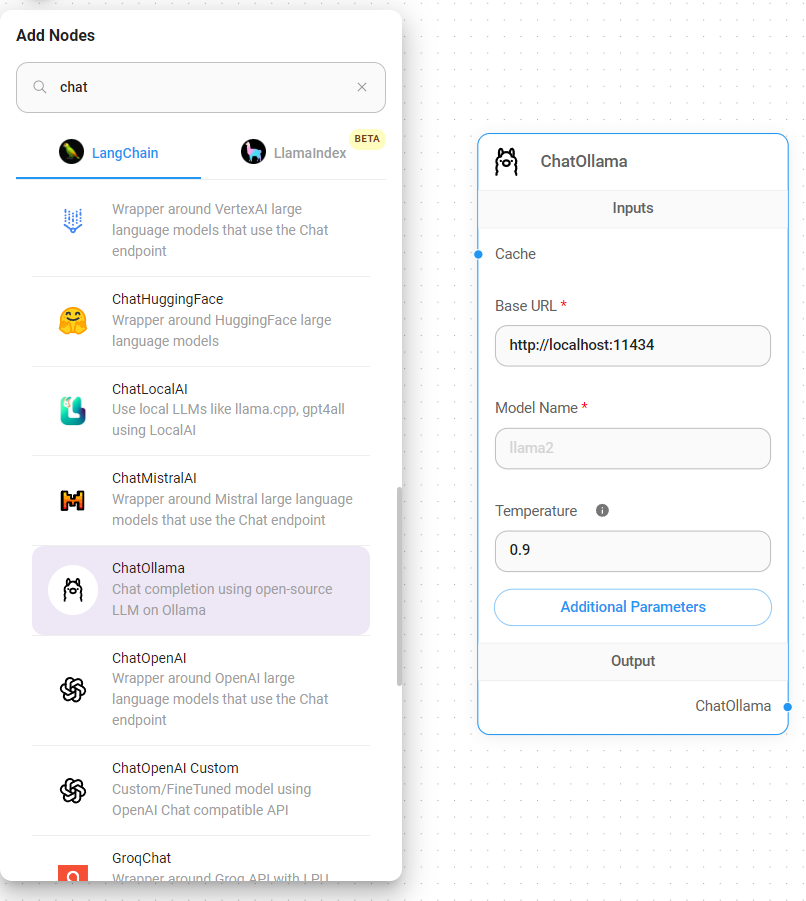

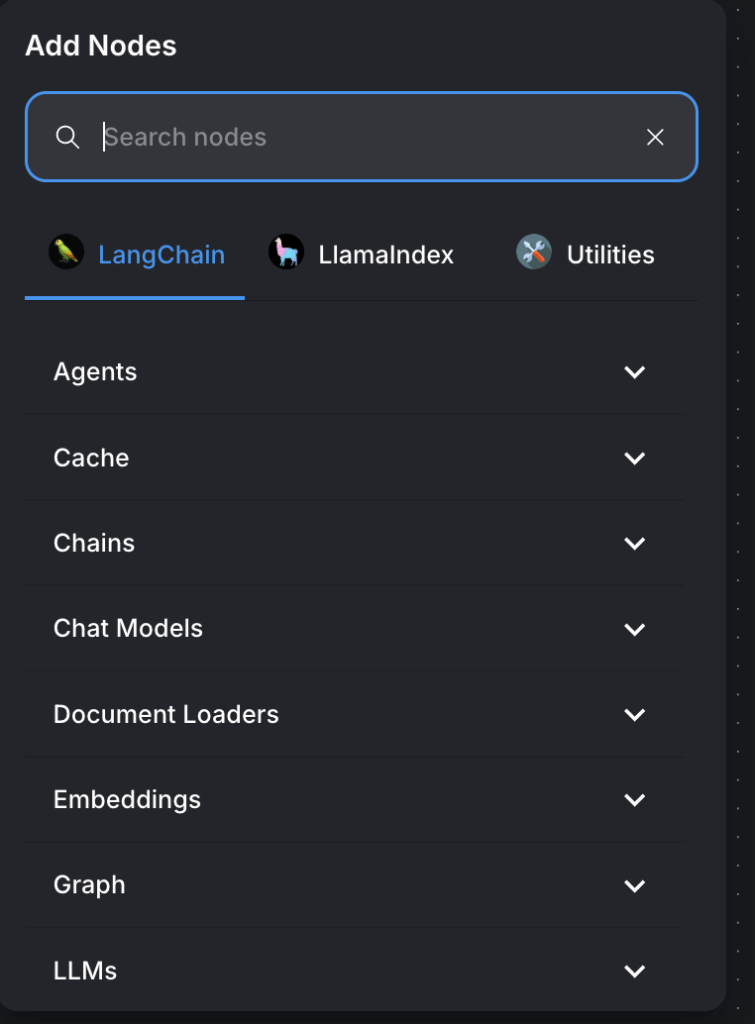

You can then add nodes from the node categories:

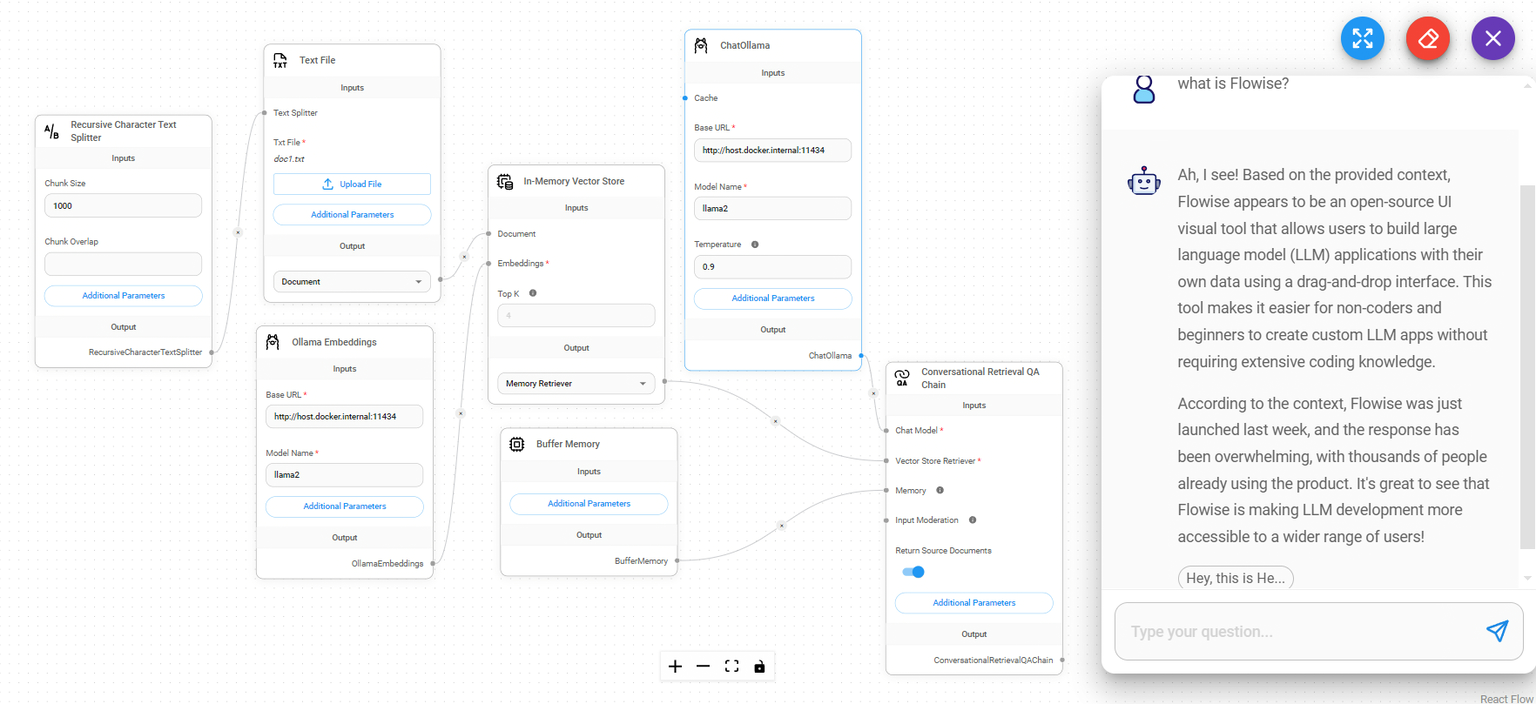

When you’re building workflows, you’ll see different node categories organized logically. LangChain nodes give you the core components and chains, while LlamaIndex nodes are specifically for RAG applications. There are Utility nodes for helper functions, Agent nodes for autonomous components, and Chat Model nodes that connect to different LLMs like Ollama or OpenAI. You’ll also find Document Loaders for processing files and Embedding nodes for vector operations.

Configure Components

When configuring your Chat Ollama node, set the model name to something like llama3.2:3b, keep the temperature around 0.7 for good creativity, and point the Base URL to http://localhost:11434 where your Ollama container is running.
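When a chatflow gives odd answers, it helps to find out whether the cause is the model or the flow. This Python sketch sends the same settings (model name, temperature 0.7, Base URL) directly to Ollama's POST /api/chat endpoint, together with a system message like the one the Conversation Chain adds. The helper names and the example prompt are placeholders.

```python
from __future__ import annotations

import json
import urllib.request


def build_chat_request(model: str, system_prompt: str, user_msg: str,
                       temperature: float = 0.7) -> dict:
    """Body for Ollama's POST /api/chat, mirroring the Chat Ollama node settings."""
    return {
        "model": model,
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": user_msg},
        ],
        "stream": False,  # one JSON reply instead of a stream of chunks
        "options": {"temperature": temperature},
    }


def chat(body: dict, base_url: str = "http://localhost:11434") -> str:
    """Send the request and return the assistant's reply text."""
    request = urllib.request.Request(f"{base_url}/api/chat",
                                     data=json.dumps(body).encode(),
                                     headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read())["message"]["content"]


# Example (placeholder prompt text):
#   body = build_chat_request("llama3.2:3b",
#                             "You are a helpful assistant running on a Jetson.",
#                             "What can you help me with?")
#   print(chat(body))
```

If the model answers sensibly here but not in Flowise, look at the chatflow (prompt, memory, connections) rather than the model.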

For the Conversation Chain, you’ll connect your Chat Model input and add a system prompt to give your AI some context about what it should do. Here’s a good starting prompt:

Test and Deploy

Once you’ve got everything configured, hit the save button and use the chat panel to test your bot. Make sure it’s responding the way you want, then you can use Flowise’s deployment options to get it ready for production use.

Deployment

There are 5 options for deployment (</> button). Flowise makes it really easy to embed your chatbots into websites. You can choose from a popup widget that floats on your page, a fullpage dedicated chat interface, or if you’re using React, there are specific React components for both popup and fullpage implementations.

The embedding code is pretty straightforward – just import the Flowise embed script and initialize it with your chatflow ID:

You also get some nice advanced features like direct public links for sharing, custom authentication if you need access controls, theme customization to match your brand, and custom JavaScript event handling for more complex integrations.
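Besides the embed widgets, every chatflow can also be called over HTTP through Flowise's prediction endpoint, /api/v1/prediction/<chatflow id>, which is handy for scripts or other services running on the Jetson. A minimal Python sketch follows; the chatflow ID and API key are placeholders, the Bearer header is only needed if you assigned an API key to the chatflow, and reading the answer from the "text" field assumes a basic chat flow (other flow types may return additional fields).

```python
from __future__ import annotations

import json
import urllib.request


def build_prediction_request(chatflow_id: str, question: str,
                             host: str = "http://localhost:3000",
                             api_key: str | None = None) -> urllib.request.Request:
    """Prepare a POST to Flowise's /api/v1/prediction/<chatflow id> endpoint."""
    headers = {"Content-Type": "application/json"}
    if api_key:  # only needed when an API key protects the chatflow
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        f"{host.rstrip('/')}/api/v1/prediction/{chatflow_id}",
        data=json.dumps({"question": question}).encode(),
        headers=headers,
        method="POST",
    )


def ask(request: urllib.request.Request) -> str:
    """Send the request and return the answer text (empty if the field is absent)."""
    with urllib.request.urlopen(request, timeout=120) as response:
        return json.loads(response.read()).get("text", "")


# Example with a placeholder chatflow ID:
#   print(ask(build_prediction_request("your-chatflow-id", "Hello, who are you?")))
```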

Troubleshooting

Common Issues You Might Run Into

Node.js Version Problems: If you’re getting errors about Node.js being too old, you’ll need to update it. Run these commands to get a newer version:

Memory Issues: Running out of memory with “JavaScript heap out of memory” errors? Increase the memory limit before starting Flowise:

GPU Not Working: If your GPU isn’t being detected, check its status with tegrastats, make sure Docker is configured to use the NVIDIA runtime, and restart the Docker service so any configuration change takes effect: sudo systemctl restart docker
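Both checks can be scripted. The Python sketch below pulls the GPU load (GR3D_FREQ) and RAM figures out of a tegrastats line, and checks whether docker info --format '{{json .Runtimes}}' lists an nvidia runtime. The regular expressions assume the usual tegrastats layout (for example "RAM 2448/7620MB ... GR3D_FREQ 37%@[305]"), which varies between JetPack releases, so treat them as a starting point. A GPU load that stays at 0% while Ollama is generating suggests inference is running on the CPU.

```python
from __future__ import annotations

import json
import re
import subprocess


def gpu_load_percent(tegrastats_line: str) -> int | None:
    """GPU load from the GR3D_FREQ field of one tegrastats line, if present."""
    match = re.search(r"GR3D_FREQ (\d+)%", tegrastats_line)
    return int(match.group(1)) if match else None


def ram_used_mb(tegrastats_line: str) -> tuple[int, int] | None:
    """(used, total) RAM in MB from the 'RAM used/totalMB' field, if present."""
    match = re.search(r"RAM (\d+)/(\d+)MB", tegrastats_line)
    return (int(match.group(1)), int(match.group(2))) if match else None


def docker_has_nvidia_runtime(runtimes_json: str) -> bool:
    """True if the JSON from `docker info --format '{{json .Runtimes}}'` has 'nvidia'."""
    return "nvidia" in json.loads(runtimes_json)


def read_docker_runtimes() -> str:
    """Run docker info and return its Runtimes map as a JSON string."""
    return subprocess.run(["docker", "info", "--format", "{{json .Runtimes}}"],
                          capture_output=True, text=True, check=True).stdout


# Example, on the Jetson:
#   print(docker_has_nvidia_runtime(read_docker_runtimes()))
#   proc = subprocess.Popen(["tegrastats"], stdout=subprocess.PIPE, text=True)
#   line = proc.stdout.readline()
#   proc.terminate()
#   print(gpu_load_percent(line), ram_used_mb(line))
```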

Port Conflicts: If port 3000 is already in use, find out what’s using it with sudo lsof -i :3000 and stop that process with sudo kill -9 <PID> (using the PID that lsof reports), or simply start Flowise on a different port: flowise start --PORT=3001
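If you'd rather pick a free port automatically, this Python sketch tries to connect to each candidate port on 127.0.0.1 and returns the first one nothing is listening on. It only detects listeners reachable on localhost (which includes ports Docker publishes on all interfaces), and the function names are hypothetical.

```python
import socket


def port_in_use(port: int, host: str = "127.0.0.1") -> bool:
    """True if something is already accepting TCP connections on host:port."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(0.5)
        return sock.connect_ex((host, port)) == 0


def first_free_port(start: int = 3000, attempts: int = 20) -> int:
    """Return the first port from `start` upward that nothing is listening on."""
    for port in range(start, start + attempts):
        if not port_in_use(port):
            return port
    raise RuntimeError(f"no free port in {start}-{start + attempts - 1}")


# Example:
#   print(first_free_port(3000))
```

Pass the result to flowise start --PORT=<port> and open that port in the browser instead of 3000.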

Permission Problems: File permission issues? Fix them with:

Docker Issues

If you’re running Flowise in Docker and having problems, here are some quick fixes:

Check what’s happening with docker logs flowise, restart the container with docker restart flowise, or see all container statuses with docker ps -a. If things are really broken, you can remove the container completely with docker rm -f flowise and recreate it with your original docker run command.
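The first checks can be automated: docker inspect --format '{{.State.Status}}' flowise prints the container's state (for example running or exited), and the Python sketch below restarts the container when it isn't running. It assumes the container is named flowise, as in the commands above, and takes the command runner as a parameter so the logic can be tested without Docker.

```python
from __future__ import annotations

import subprocess
from typing import Callable, Sequence

# A runner takes an argv list and returns the command's stdout.
Runner = Callable[[Sequence[str]], str]


def run_cmd(args: Sequence[str]) -> str:
    """Run a command and return stdout; raises CalledProcessError on failure."""
    return subprocess.run(list(args), capture_output=True, text=True,
                          check=True).stdout


def container_state(name: str, run: Runner = run_cmd) -> str:
    """Docker's state string for a container, e.g. 'running' or 'exited'."""
    return run(["docker", "inspect", "--format", "{{.State.Status}}", name]).strip()


def ensure_running(name: str = "flowise", run: Runner = run_cmd) -> str:
    """Restart the container if it is not running; return the state found first."""
    state = container_state(name, run)
    if state != "running":
        run(["docker", "restart", name])
    return state


# Example:
#   print(ensure_running("flowise"))
```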

Performance Problems

Slow responses? Try using local models instead of API calls, reduce the context window size, or optimize your RAG chunk sizes if you’re doing document processing.
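Chunk size and chunk overlap are the two settings Flowise's text splitter nodes expose (for example Chunk Size and Chunk Overlap on the Recursive Character Text Splitter). The Python sketch below is a simplified fixed-window splitter, not the recursive algorithm those nodes use, but it shows the trade-off: every retrieved chunk is pasted into the prompt, so smaller chunks keep prompts short, while more overlap produces more chunks to embed and store.

```python
from __future__ import annotations


def chunk_text(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> list[str]:
    """Split text into fixed-size character windows sharing `chunk_overlap` chars."""
    if chunk_size <= 0 or not 0 <= chunk_overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= chunk_overlap < chunk_size")
    step = chunk_size - chunk_overlap  # how far each new window advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this window reached the end
            break
    return chunks


# Example:
#   print(chunk_text("abcdefghij", chunk_size=4, chunk_overlap=1))
```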

Memory usage too high? Reduce the buffer window memory size, switch to smaller models, or clear your browser cache, which sometimes helps.
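A buffer window memory keeps only the most recent k exchanges and sends them along with every new question, so lowering its size directly shortens the prompt the model has to process. The hypothetical class below is a few-line illustration of that idea using collections.deque; it is not Flowise's implementation.

```python
from __future__ import annotations

from collections import deque


class WindowMemory:
    """Keep only the last `k` user/assistant exchanges."""

    def __init__(self, k: int = 4) -> None:
        self.turns: deque[tuple[str, str]] = deque(maxlen=k)

    def add(self, user: str, assistant: str) -> None:
        # Once k exchanges are stored, appending drops the oldest one.
        self.turns.append((user, assistant))

    def as_messages(self) -> list[dict[str, str]]:
        """Flatten the window into chat messages to prepend to the next request."""
        messages = []
        for user, assistant in self.turns:
            messages.append({"role": "user", "content": user})
            messages.append({"role": "assistant", "content": assistant})
        return messages


# Example:
#   memory = WindowMemory(k=2)
#   memory.add("Hi", "Hello!")
#   print(memory.as_messages())
```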

Getting Help

If you’re stuck, the Flowise community is pretty active. Check out the GitHub Issues for bug reports and feature requests. The official documentation is also quite comprehensive. For Jetson-specific issues, the NVIDIA Developer Forums are your best bet.