Jetson Generative AI – Jetson Copilot

Large language models (LLMs) become far more useful when combined with Retrieval-Augmented Generation (RAG). Jetson Copilot demonstrates how to run open-source LLMs locally on your Jetson device with access to your own indexed knowledge base, creating a personalized AI assistant that can answer questions about your specific domain and documents.

In this article you’ll learn how to set up and run Jetson Copilot, a reference application that combines local LLM inference with RAG capabilities for intelligent document search and question answering.

Requirements

| Hardware / Software | Notes |
|---|---|
| Jetson AGX Orin (64GB) | Recommended for best performance |
| Jetson AGX Orin (32GB) | Good performance for most use cases |
| Jetson Orin Nano (8GB) | Minimum requirement |
| JetPack 5 (L4T r35.x) or JetPack 6 (L4T r36.x) | Both versions supported |
| NVMe SSD (highly recommended) | For storage speed and space |
| 6GB for the jetrag container | Container image storage |
| ~4GB for default models | llama3 and mxbai-embed-large models |
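Before pulling anything, you can check whether the target drive has room for the container image and the default models. The following is a minimal Python sketch, assuming the approximate figures from the requirements table (about 6GB for the container plus about 4GB for models); the checked path (`/`) and the 2GB safety margin are hypothetical values you should adjust for your setup.

```python
import shutil

GB = 1024 ** 3

# Approximate sizes from the requirements table; treat them as estimates.
CONTAINER_GB = 6.0   # jetrag container image
MODELS_GB = 4.0      # llama3 + mxbai-embed-large (approximate)
MARGIN_GB = 2.0      # hypothetical headroom for indexes and logs


def required_bytes(margin_gb: float = MARGIN_GB) -> int:
    """Total bytes to reserve for the container, default models, and a margin."""
    return int((CONTAINER_GB + MODELS_GB + margin_gb) * GB)


def has_enough_space(free_bytes: int, margin_gb: float = MARGIN_GB) -> bool:
    """Return True if free_bytes covers the estimated storage budget."""
    return free_bytes >= required_bytes(margin_gb)


if __name__ == "__main__":
    # "/" is an assumption; point this at the drive (e.g. your NVMe mount) you will use.
    usage = shutil.disk_usage("/")
    print(f"free: {usage.free / GB:.1f} GB, needed: {required_bytes() / GB:.1f} GB")
    print("OK" if has_enough_space(usage.free) else "Not enough space")
```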

What is Jetson Copilot?

Jetson Copilot is a reference application for a local AI assistant that demonstrates:

- Running open-source LLMs (large language models) on device

- RAG (retrieval-augmented generation) to let the LLM access your locally indexed knowledge

- Document indexing and search for personalized knowledge bases

- Web-based interface for easy interaction and management

Note: You do not need jetson-containers installed on your system. Jetson Copilot uses the jetrag container image that is managed and built separately.
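Conceptually, the RAG step listed above embeds your question, compares it with the embeddings of indexed document chunks, and hands the closest chunks to the LLM as context. The following is a minimal, dependency-free Python sketch of that retrieval step using cosine similarity. It is not Jetson Copilot's actual code; the toy 3-dimensional vectors stand in for real embeddings such as those produced by mxbai-embed-large.

```python
import math
from typing import List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector has no direction; treat as unrelated
    return dot / (norm_a * norm_b)


def top_k(query_vec: Sequence[float],
          index: List[Tuple[str, Sequence[float]]],
          k: int = 2) -> List[str]:
    """Return the k chunk texts whose embeddings are most similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), text) for text, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


if __name__ == "__main__":
    # Toy index of (chunk text, embedding); real embeddings have far more dimensions.
    toy_index = [
        ("USB device mode networking notes", [0.9, 0.1, 0.0]),
        ("Power mode configuration", [0.1, 0.9, 0.0]),
        ("Camera connector pinout", [0.0, 0.2, 0.9]),
    ]
    query = [0.8, 0.2, 0.1]  # pretend embedding of a question about USB networking
    print(top_k(query, toy_index, k=1))
```

A real index stores many more chunks and usually relies on a vector store for fast search, but the ranking idea is the same.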

Step-by-Step Setup

1. Clone the repository

2. Enter the directory

3. First-time environment setup

If this is your first time running Jetson Copilot, run the setup script (`./setup_environment.sh`) to make sure all required software, including Docker, is installed.

4. Launch Jetson Copilot

5. Access the Web Interface

The console will show two access options:

- Local Access (on the Jetson itself)

- Network Access (from other devices)
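If you need to work out the network URL yourself, for example when connecting from a laptop, the Python sketch below guesses the Jetson's LAN IP address and formats both URLs. It assumes the web UI listens on Streamlit's default port, 8501; check the console output for the port actually in use. Connecting a UDP socket sends no packets; it only asks the OS which local interface would route to the probe address.

```python
import socket

DEFAULT_PORT = 8501  # assumption: Streamlit's default port; confirm in the console output


def guess_lan_ip(probe_host: str = "8.8.8.8") -> str:
    """Best-effort guess of this machine's LAN IP address.

    Connecting a UDP socket sends no packets; it only makes the OS pick
    the local interface that would route to probe_host.
    """
    sock = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        sock.connect((probe_host, 80))
        return sock.getsockname()[0]
    except OSError:
        return "127.0.0.1"  # no route available; fall back to loopback
    finally:
        sock.close()


def access_urls(lan_ip: str, port: int = DEFAULT_PORT) -> dict:
    """Build the local and network URLs for the web UI."""
    return {
        "local": f"http://localhost:{port}",
        "network": f"http://{lan_ip}:{port}",
    }


if __name__ == "__main__":
    for name, url in access_urls(guess_lan_ip()).items():
        print(f"{name}: {url}")
```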

Tip: On Ubuntu Desktop, a frameless Chromium window will automatically open to make it look like a standalone application.

How to Use Jetson Copilot

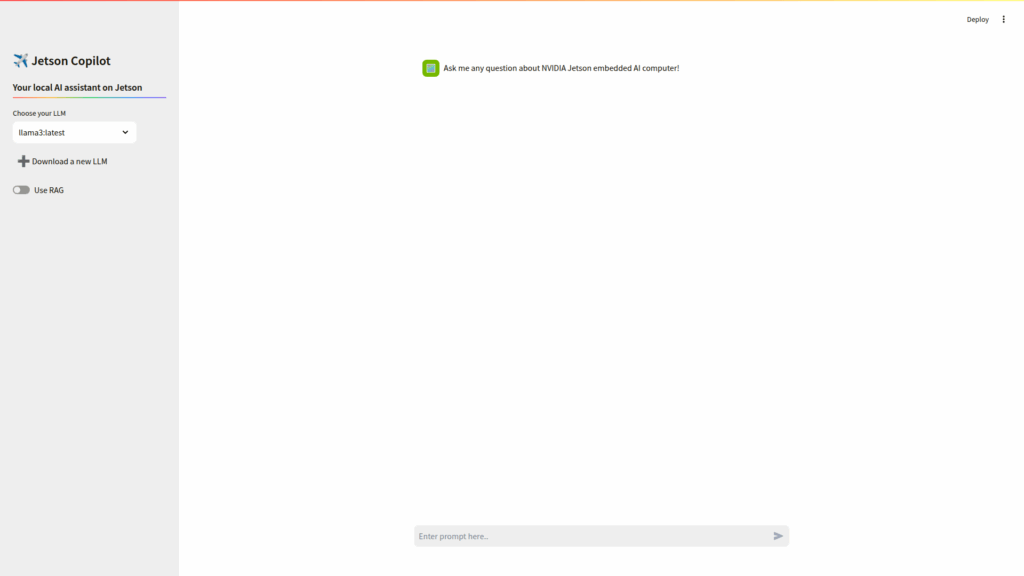

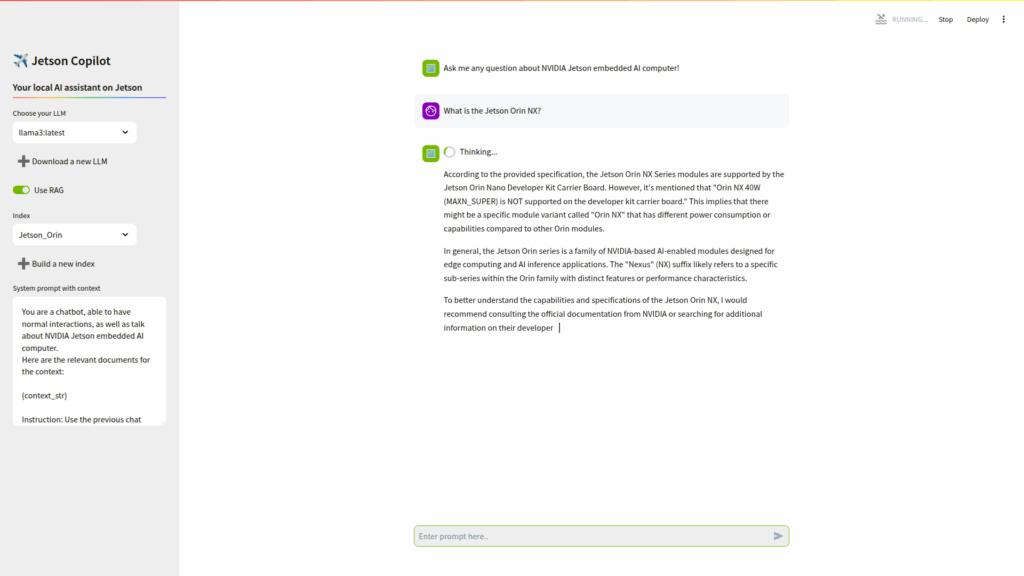

1. Basic LLM Interaction

You can use Jetson Copilot as a standalone LLM chat assistant without enabling RAG. On first run, Llama3 (8B) is downloaded and used as the default model. It is capable on its own, but it has limitations regarding:

- Information after its training cutoff date

- Domain-specific or personal knowledge

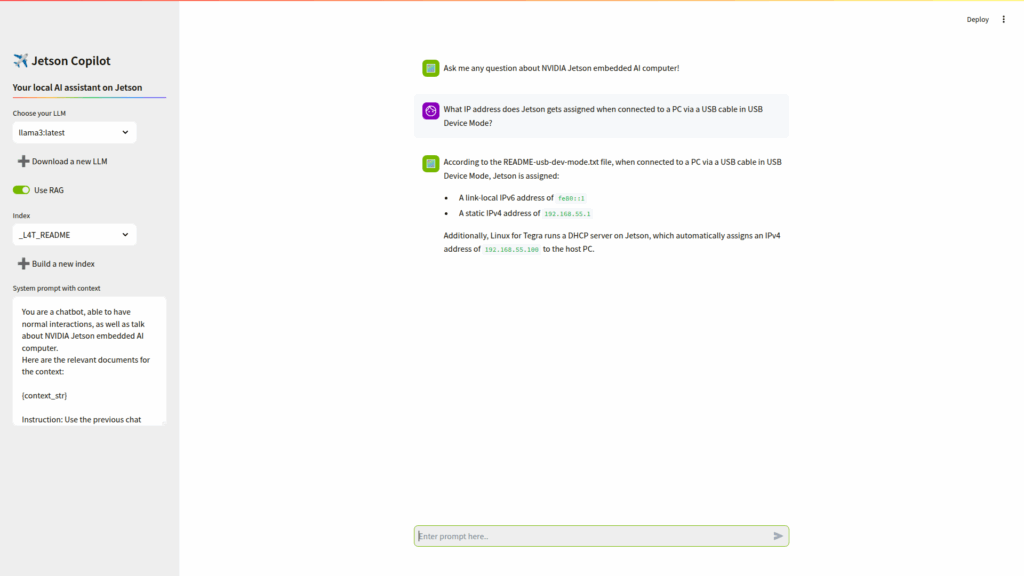

2. Using Pre-built Knowledge Index

To enable RAG capabilities:

- Toggle “Use RAG” in the side panel

- Select an index under the “Index” dropdown

  - The `_L4T_README` index is provided as a demonstration

  - It is built from the README files in the L4T-README folder on the Jetson desktop, which is mounted at `/media//L4T-README/` after running:

Example questions:

- What IP address does Jetson get assigned when connected to a PC via USB cable in USB Device Mode?
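With RAG enabled, a question like this is typically answered by inserting the retrieved index chunks into the prompt ahead of the question. The Python sketch below shows one common way to assemble such a prompt; the template wording and the `build_rag_prompt` helper are hypothetical and are not Jetson Copilot's actual prompt.

```python
from typing import List


def build_rag_prompt(question: str, chunks: List[str]) -> str:
    """Combine retrieved context chunks and the user question into one prompt."""
    # Number each chunk so the model (and you) can tell the sources apart.
    context = "\n\n".join(
        f"[{i}] {chunk.strip()}" for i, chunk in enumerate(chunks, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


if __name__ == "__main__":
    retrieved = ["(text of a README chunk about USB Device Mode)"]  # placeholder chunk
    print(build_rag_prompt(
        "What IP address does Jetson get assigned when connected to a PC "
        "via USB cable in USB Device Mode?",
        retrieved,
    ))
```

Restricting the model to the supplied context is what lets it answer from your README files instead of from its training data alone.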

3. Building Your Own Knowledge Index

Step 3.1 Prepare Your Documents

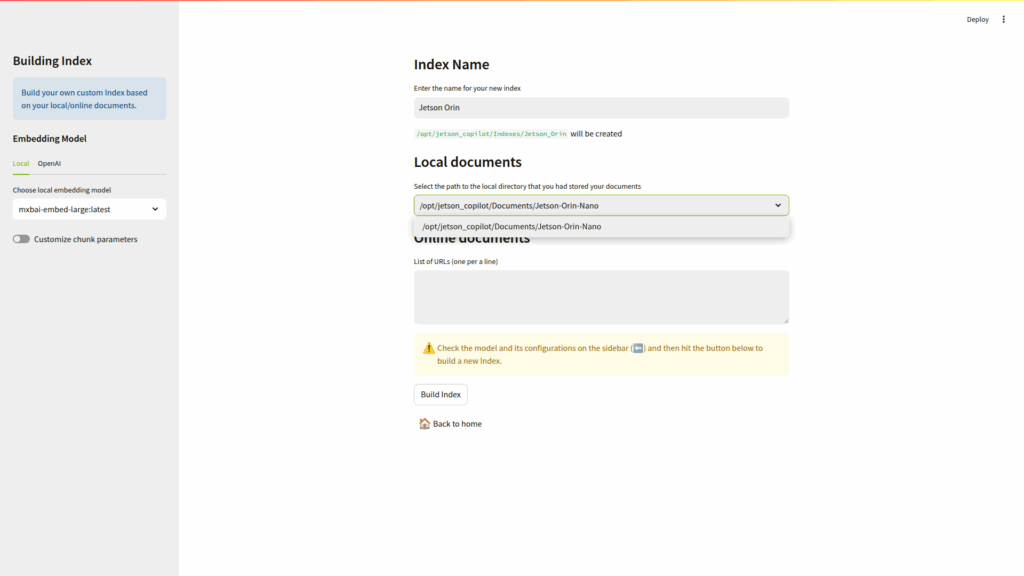

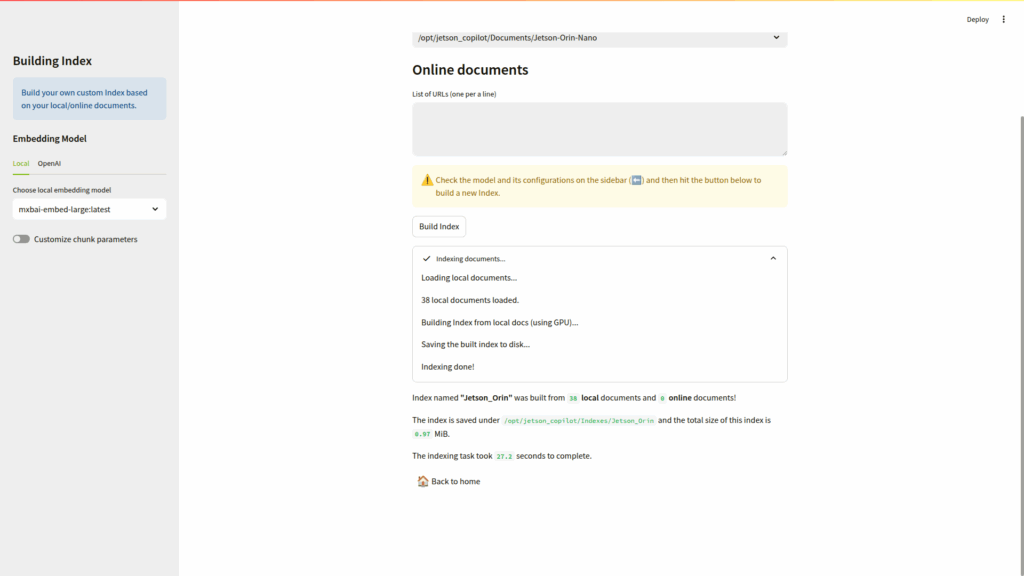

Step 3.2 Build the Index via Web Interface

- Open the sidebar and toggle on “Use RAG”

- Click “➕Build a new index” to access the Build Index page

- Give your index a name (e.g., “JON Carrier Board”)

- Select your document directory from the dropdown (e.g., `/opt/jetson_copilot/Documents/Jetson-Orin-Nano`)

- Add online URLs (optional) – one URL per line in the text area

- Ensure mxbai-embed-large is selected as the embedding model

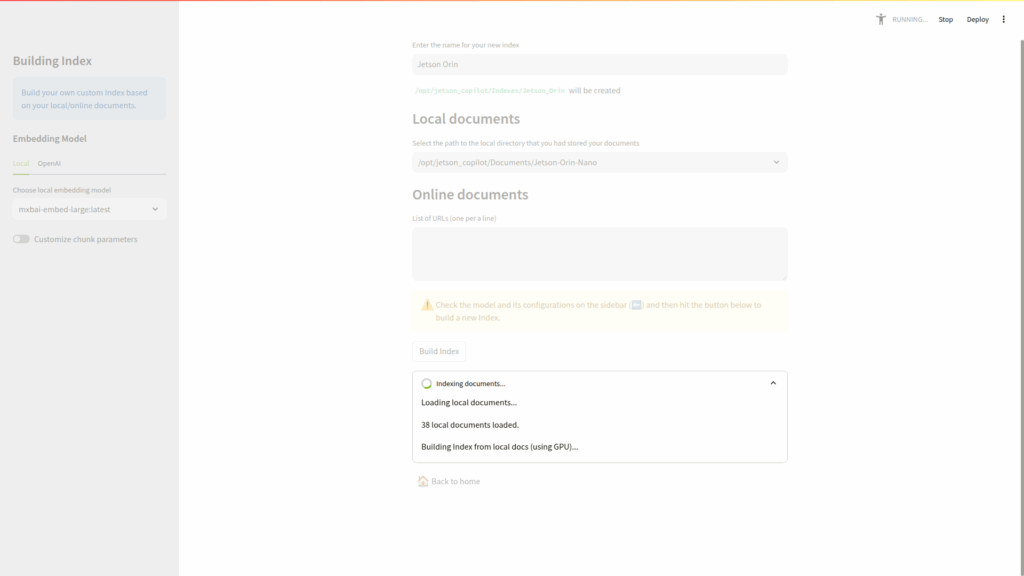

- Click “Build Index” and monitor progress in the status dropdown
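Building an index generally means splitting each document into overlapping text chunks, embedding each chunk, and storing the vectors. The Python sketch below illustrates two inputs on this page: parsing the URL text area (one URL per line) and splitting text into overlapping character windows. The helper names and the chunk size and overlap defaults are hypothetical, not the values Jetson Copilot uses.

```python
from typing import List


def parse_url_box(text: str) -> List[str]:
    """Split the URL text area into a list: one URL per line, blank lines ignored."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def chunk_text(text: str, size: int = 500, overlap: int = 50) -> List[str]:
    """Split text into windows of `size` characters that overlap by `overlap`.

    The overlap keeps a sentence that straddles a boundary visible in both chunks.
    """
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("require size > 0 and 0 <= overlap < size")
    step = size - overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + size])
        if start + size >= len(text):
            break  # the last window already reaches the end of the text
    return chunks
```

For example, with `size=4` and `overlap=1`, the text `abcdefghij` becomes `abcd`, `defg`, `ghij`. Real pipelines often split on sentences or tokens rather than raw characters, but the sliding-window idea is the same.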

Step 3.3 Use Your Custom Index

Once built, return to the home screen and select your newly created index from the dropdown.

Advanced Features

Different LLM Models

You can test and switch between different language models:

- Llama3 (8B) – Default, good balance of performance and accuracy

- Additional models can be downloaded via the Ollama interface

- Model selection available in the web interface settings
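Because models are managed through Ollama, a model you have pulled can also be queried directly through Ollama's REST API. This Python sketch builds a non-streaming request to Ollama's `/api/generate` endpoint, which accepts `model`, `prompt`, and `stream` fields and returns the text in a `response` field. It assumes Ollama is reachable at its default address, `http://localhost:11434` (for example, from inside the container), which may not match your setup.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default host and port


def build_generate_request(model: str, prompt: str,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the request and return the model's text (the 'response' field).

    Requires a running Ollama server with the model already pulled.
    """
    req = build_generate_request(model, prompt)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

For example, `generate("llama3", "In one sentence, what is RAG?")` returns the reply once the server is running. Swapping the `model` argument is enough to compare different models you have downloaded.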

Different Embedding Models

For RAG functionality, you can choose embedding models:

- mxbai-embed-large – Recommended default

- OpenAI embedding models – Experimental support, not yet fully tested
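An embedding model turns each document chunk, and each question, into a vector used for similarity search. As a sketch, assuming Ollama serves mxbai-embed-large at its default address, the Python below builds a request for Ollama's `/api/embeddings` endpoint, which takes a `model` and a `prompt` and returns an `embedding` list. Newer Ollama releases also provide `/api/embed`; this is not necessarily the endpoint Jetson Copilot itself calls.

```python
import json
import urllib.request
from typing import List

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default host and port


def build_embedding_request(text: str, model: str = "mxbai-embed-large",
                            base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/embeddings endpoint."""
    payload = {"model": model, "prompt": text}
    return urllib.request.Request(
        f"{base_url}/api/embeddings",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def embed(text: str, model: str = "mxbai-embed-large",
          timeout: float = 60.0) -> List[float]:
    """Return the embedding vector for `text` (requires a running Ollama server)."""
    req = build_embedding_request(text, model)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))["embedding"]
```

An index must be queried with the same embedding model it was built with; vectors from different models are not comparable.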

Document Types Supported

Jetson Copilot can index various document formats:

- PDF files

- Text files

- Markdown files

- Web pages (via URL ingestion)
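Before building an index, it can help to see which files in a document folder match the file formats listed above (web pages are added separately as URLs). The Python sketch below walks a directory and groups files by type. The extension-to-type mapping and the `doc_type`/`find_documents` helpers are hypothetical; Jetson Copilot's own loader may accept more or fewer formats.

```python
from pathlib import Path
from typing import Dict, List, Optional

# Hypothetical extension-to-type mapping based on the formats listed above.
SUPPORTED = {".pdf": "pdf", ".txt": "text", ".md": "markdown", ".markdown": "markdown"}


def doc_type(filename: str) -> Optional[str]:
    """Return the document type for a filename, or None if unsupported."""
    return SUPPORTED.get(Path(filename).suffix.lower())


def find_documents(root: str) -> Dict[str, List[Path]]:
    """Recursively group supported files under `root` by document type."""
    found: Dict[str, List[Path]] = {kind: [] for kind in set(SUPPORTED.values())}
    base = Path(root)
    if not base.is_dir():
        return found  # missing folder: nothing to index
    for path in sorted(base.rglob("*")):
        kind = doc_type(path.name)
        if kind is not None and path.is_file():
            found[kind].append(path)
    return found


if __name__ == "__main__":
    # Parent of the example folder from the Build Index step; adjust to your own documents.
    for kind, paths in find_documents("/opt/jetson_copilot/Documents").items():
        print(f"{kind}: {len(paths)} file(s)")
```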

Development and Customization

Development Mode

For developers wanting to modify the Streamlit application:

- Enable auto-rerun in the web UI (top-right corner)

- Choose “Always rerun” to automatically update when you change source code

Manual Development Setup

Once inside the container:

Directory Structure

Troubleshooting

| Issue | Fix |
|---|---|
| Container fails to start | Ensure Docker is properly installed via `./setup_environment.sh` |
| Models not downloading | Check internet connection and available disk space (>10GB) |
| Chromium window won’t close | Manually close Chromium window; stopping container doesn’t close browser |
| RAG not working | Verify index is built and selected, check embedding model |
| Out of memory error | Close other applications, consider using smaller model |
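For out-of-memory errors, it helps to check how much memory is actually available before loading a model. On Jetson the CPU and GPU share the same physical memory, so `/proc/meminfo` gives a reasonable first look. The Python sketch below parses it; the 6GiB threshold is a hypothetical example, not a documented requirement for any model.

```python
from typing import Dict


def parse_meminfo(text: str) -> Dict[str, int]:
    """Parse /proc/meminfo-style text into {field: value in kB}."""
    info: Dict[str, int] = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if not sep:
            continue
        parts = rest.split()
        if parts and parts[0].isdigit():
            info[name.strip()] = int(parts[0])  # most fields are reported in kB
    return info


def available_gib(info: Dict[str, int]) -> float:
    """Return MemAvailable in GiB (0.0 if the field is missing)."""
    return info.get("MemAvailable", 0) / (1024 ** 2)


if __name__ == "__main__":
    threshold_gib = 6.0  # hypothetical headroom for an 8B model; adjust for your model
    try:
        with open("/proc/meminfo") as f:
            free_gib = available_gib(parse_meminfo(f.read()))
    except OSError:
        print("/proc/meminfo not available (Linux only)")
    else:
        print(f"MemAvailable: {free_gib:.1f} GiB")
        if free_gib < threshold_gib:
            print("Low memory: close other applications or try a smaller model.")
```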

For more information about Jetson Copilot and advanced configurations, visit the Jetson Copilot GitHub repository.