Jetson Generative AI – n8n Local Agents

n8n turns your Jetson into an intelligent agent factory through its visual workflow automation platform. This fair-code licensed tool, with over 123k GitHub stars (as of July), lets you create autonomous agents that think, decide, and act, either locally using LLMs served by Ollama or through external services. You can build RAG pipelines and sophisticated AI agents that monitor systems, process data, make decisions, and execute actions, all within n8n workflows.

Requirements

| Hardware / Software | Notes |
|---|---|
| Any Jetson (Nano/Orin) with ≥ 4 GB RAM | 16 GB+ recommended for complex workflows |
| NVMe SSD | Recommended for workflow data storage |

Step-by-Step Setup

1. Create Necessary Directories

2. Launch n8n

3. Access the Web Interface

Once the container starts, you’ll see:

Editor is now accessible via:

http://localhost:5678

- Local access: Open http://localhost:5678 in your browser

- Remote access: Use http://<jetson-ip>:5678
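On a headless Jetson it can help to confirm the editor is up before opening a browser. The following is a minimal Python sketch using only the standard library; it assumes n8n is on its default port 5678 and treats any HTTP response as "up". The helper names `n8n_url` and `is_reachable` are hypothetical, not part of n8n.

```python
import sys
import urllib.error
import urllib.request


def n8n_url(host: str = "localhost", port: int = 5678) -> str:
    """Build the editor URL for a Jetson host name or IP address."""
    return f"http://{host}:{port}"


def is_reachable(url: str, timeout: float = 3.0) -> bool:
    """Return True if any HTTP server answers at url."""
    try:
        with urllib.request.urlopen(url, timeout=timeout):
            return True
    except urllib.error.HTTPError:
        # An HTTP error status still means a server is listening.
        return True
    except OSError:
        # Connection refused, unresolvable host, or timeout.
        return False


if __name__ == "__main__":
    # Pass the Jetson's IP as the first argument to test remote access.
    host = sys.argv[1] if len(sys.argv) > 1 else "localhost"
    url = n8n_url(host)
    print(f"{url}: {'reachable' if is_reachable(url) else 'not reachable'}")
```

For example, run it as `python3 check_n8n.py <jetson-ip>` from another machine on the same network; the file name is arbitrary.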

4. Set up Ollama (Separate Container)

In a new terminal, start Ollama:

5. Set up Ollama Models

Pull AI models into your separate Ollama container:
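As an alternative to the CLI, a model can also be pulled through Ollama's REST API, which is convenient for scripting. This minimal Python sketch assumes Ollama is reachable at http://localhost:11434 (as with --network=host in this guide) and sends `POST /api/pull` with streaming turned off, so the call blocks until the download finishes. `llama3.2:3b` is only an example, and the `pull_request` and `pull_model` helpers are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumes --network=host, as in this guide


def pull_request(model: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a POST /api/pull request that waits for the whole download."""
    payload = {
        "model": model,
        "name": model,  # older Ollama releases documented "name" instead of "model"
        "stream": False,  # one final JSON reply instead of progress lines
    }
    return urllib.request.Request(
        f"{base_url}/api/pull",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def pull_model(model: str, base_url: str = OLLAMA_URL, timeout: float = 3600.0) -> str:
    """Pull a model and return the final status string (e.g. "success")."""
    with urllib.request.urlopen(pull_request(model, base_url), timeout=timeout) as resp:
        return json.loads(resp.read())["status"]


if __name__ == "__main__":
    try:
        print(pull_model("llama3.2:3b"))
    except OSError as err:
        print(f"Could not reach Ollama at {OLLAMA_URL}: {err}")
```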

Model Size Guide for Jetson:

- 4-8 GB RAM: Use 3B models (e.g., llama3.2:3b)

- 16 GB+ RAM: Can handle 7B-8B models

- 32 GB+ RAM: Can run multiple large models simultaneously
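To apply this guide automatically, the Python sketch below reads `MemTotal` from `/proc/meminfo`, rounds it up to the next power of two, and maps the result onto the tiers listed. The rounding is a heuristic that assumes the module's rated RAM is a power of two (true for common Jetson modules: 4, 8, 16, 32 or 64 GB) while the kernel reports slightly less because of reserved memory. The function names are hypothetical.

```python
import math


def read_memtotal_kib(path: str = "/proc/meminfo") -> int:
    """Read total system memory in KiB (Jetson CPU and GPU share this RAM)."""
    with open(path) as f:
        for line in f:
            if line.startswith("MemTotal:"):
                return int(line.split()[1])
    raise ValueError(f"MemTotal not found in {path}")


def nominal_ram_gb(memtotal_kib: int) -> int:
    """Round MemTotal up to the next power of two, e.g. 7.4 GiB -> 8."""
    gib = memtotal_kib / (1024 * 1024)
    return 2 ** math.ceil(math.log2(gib))


def suggest_models(ram_gb: int) -> str:
    """Map nominal RAM onto the model size tiers of this guide."""
    if ram_gb >= 32:
        return "multiple large models simultaneously"
    if ram_gb >= 16:
        return "7B-8B models"
    if ram_gb >= 4:
        return "3B models (e.g., llama3.2:3b)"
    return "below the 4 GB minimum listed in the requirements"


if __name__ == "__main__":
    try:
        ram = nominal_ram_gb(read_memtotal_kib())
        print(f"~{ram} GB RAM: {suggest_models(ram)}")
    except (OSError, ValueError) as err:
        print(f"Could not read memory size: {err}")
```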

6. Configure Ollama Connection in n8n

- In n8n workflows:

  - Add the “Ollama Chat Model” node to any workflow

  - Set the base URL to http://localhost:11434

  - Select your pulled model from the dropdown (e.g., llama3.2:3b)

  - Test the connection

- Create credentials (if needed):

  - Go to Settings > Credentials

  - Add an “Ollama” credential

  - Set the Base URL to http://localhost:11434

Note: Since both containers use --network=host, n8n can reach Ollama at localhost:11434.

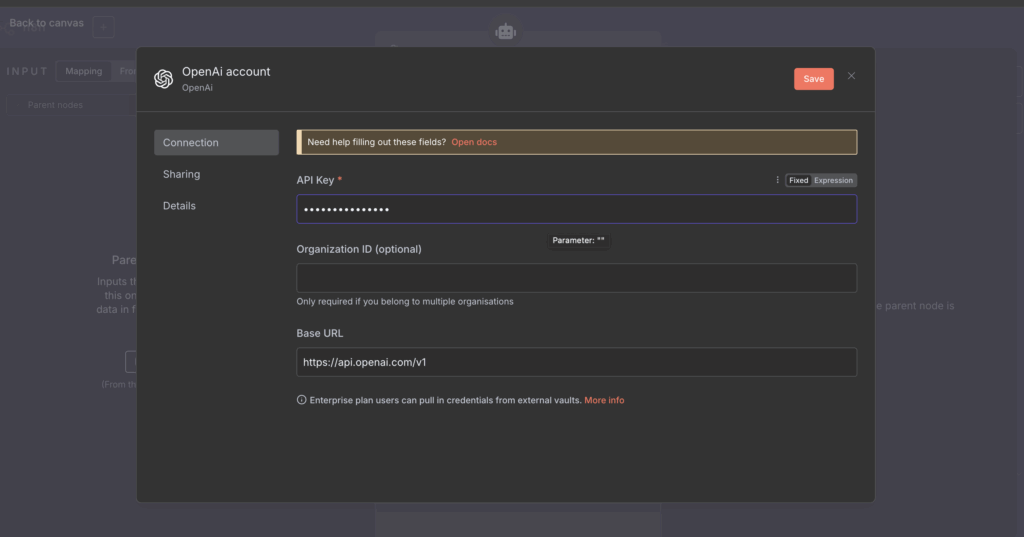
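If the model dropdown stays empty or the connection test fails, you can check the endpoint that lists installed models (the same one the curl check in the troubleshooting section uses) outside n8n. This Python sketch (standard library only) fetches `GET /api/tags` from http://localhost:11434 and checks whether the model you selected is installed, treating a bare name like `qwen2.5` as `qwen2.5:latest`, which is how Ollama resolves untagged names. The function names are hypothetical.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"


def normalize(name: str) -> str:
    """Ollama treats a bare name like "qwen2.5" as "qwen2.5:latest"."""
    return name if ":" in name else f"{name}:latest"


def installed_models(tags: dict) -> list:
    """Extract model names from a GET /api/tags response body."""
    return [m["name"] for m in tags.get("models", [])]


def has_model(tags: dict, wanted: str) -> bool:
    """True if `wanted` (with or without a tag) is among the installed models."""
    target = normalize(wanted)
    return any(normalize(n) == target for n in installed_models(tags))


def fetch_tags(base_url: str = OLLAMA_URL, timeout: float = 5.0) -> dict:
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.loads(resp.read())


if __name__ == "__main__":
    wanted = "llama3.2:3b"  # the model selected in the Ollama Chat Model node
    try:
        tags = fetch_tags()
    except OSError as err:
        print(f"Ollama not reachable at {OLLAMA_URL}: {err}")
    else:
        print("installed:", ", ".join(installed_models(tags)) or "(none)")
        print(f"{wanted} present:", has_model(tags, wanted))
```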

7. Use External Services

If you're going to use an external service such as OpenAI, add its node to the workflow and then configure its credentials.

8. Explore Community Workflows and Templates

n8n has a rich ecosystem of community-contributed workflows that you can use as starting points:

- Official Template Gallery: Visit n8n.io/workflows to browse 800+ workflow templates

- GitHub Community: Search GitHub for “n8n-workflow” to find community contributions

- Template Categories:

  - AI Agent Chat workflows

  - Content creation automation

  - Social media management

  - Email processing with AI

  - Data transformation pipelines

  - Slack/Discord bots

How to use templates:

- Browse templates at n8n.io/workflows

- Click “Use for free” on any template

- Copy the template JSON and paste it into a workflow, or save it as a JSON file and import it

- Customize nodes and credentials to match your setup
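Before importing a template, a quick look at its JSON shows which nodes you will need to configure. This Python sketch assumes the file is a standard n8n workflow export with top-level `nodes` and `connections` keys; it lists the node types used and the nodes that still carry a `credentials` entry. Templates may omit credential entries, so still review each node in the editor. `summarize_workflow` is a hypothetical helper.

```python
import json
import sys


def summarize_workflow(raw: str) -> dict:
    """List node types and the nodes that carry credentials in an n8n workflow JSON."""
    wf = json.loads(raw)
    if not isinstance(wf, dict) or not isinstance(wf.get("nodes"), list) or "connections" not in wf:
        raise ValueError("not an n8n workflow: expected 'nodes' and 'connections'")
    node_types = sorted({node.get("type", "?") for node in wf["nodes"]})
    # Nodes with a "credentials" entry need to be pointed at your own credentials.
    needs_credentials = sorted(
        node.get("name", "?") for node in wf["nodes"] if node.get("credentials")
    )
    return {"node_types": node_types, "needs_credentials": needs_credentials}


if __name__ == "__main__":
    if len(sys.argv) > 1:
        with open(sys.argv[1], encoding="utf-8") as f:
            summary = summarize_workflow(f.read())
        print("Node types:", *summary["node_types"], sep="\n  ")
        print("Check credentials on:", *summary["needs_credentials"], sep="\n  ")
```

Usage: `python3 inspect_template.py template.json` (both file names are placeholders).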

Popular AI templates to try:

9. Advanced Agent Features

You can create advanced workflows with n8n using:

- Interactive Chat Agents: Build conversational AI with Chat Trigger nodes for real-time user interactions

- File Processing Intelligence: Load schemas, extract data from files, and combine with AI for document analysis

- Memory Management: Use Window Buffer Memory to maintain conversation context across multiple interactions

- Multi-step reasoning: Chain multiple LLM operations for complex decisions and data processing

- Dynamic Data Combination: Merge schema data with chat inputs for context-aware responses

- Conditional logic: Route agent workflows based on AI-generated decisions and user inputs

- Error handling: Build robust failure recovery into agent behavior

- Local AI integration: Combine multiple local models for specialized tasks such as SQL generation and data analysis
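Conceptually, window buffer memory keeps only the most recent exchanges of each conversation and feeds them back to the model with the next message. The Python sketch below illustrates that idea with a bounded deque per session ID; it is not n8n's implementation, and the class name and the default window of 5 are arbitrary choices for illustration.

```python
from collections import defaultdict, deque


class WindowBufferMemory:
    """Keep only the last `window` user/assistant exchanges per session."""

    def __init__(self, window: int = 5) -> None:
        # One exchange is two messages, so each deque holds 2 * window entries;
        # older messages fall off the left end automatically.
        self._sessions = defaultdict(lambda: deque(maxlen=2 * window))

    def add_exchange(self, session_id: str, user: str, assistant: str) -> None:
        history = self._sessions[session_id]
        history.append({"role": "user", "content": user})
        history.append({"role": "assistant", "content": assistant})

    def context(self, session_id: str) -> list:
        """Messages to prepend to the next prompt for this session."""
        return list(self._sessions.get(session_id, ()))


if __name__ == "__main__":
    memory = WindowBufferMemory(window=2)
    for turn in range(3):
        memory.add_exchange("demo", f"question {turn}", f"answer {turn}")
    for message in memory.context("demo"):
        print(message["role"], "->", message["content"])
```

With `window=2`, the first exchange has already dropped out after the third turn, so the model would only see questions 1 and 2 and their answers. Keying the buffer by session ID keeps separate conversations from leaking into each other.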

For example, a complex agent workflow that generates SQL queries from a database schema using local LLM reasoning:
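The same pattern (merge a schema with the user's question, then ask a local model for SQL) can be tried outside n8n to tune the prompt. This is a minimal Python sketch, not the workflow shown here: it assumes Ollama at http://localhost:11434 with `llama3.2:3b` pulled, calls `POST /api/chat` with streaming disabled, and uses a hypothetical prompt and example schema. Treat the returned SQL as untrusted and review or validate it before running it against a real database.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"


def build_sql_messages(schema: str, question: str) -> list:
    """Merge the schema and the user's question into chat messages."""
    system = (
        "You write a single SQL query that answers the question. "
        "Use only tables and columns from the schema. Reply with SQL only."
    )
    user = f"Schema:\n{schema}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]


def generate_sql(schema: str, question: str, model: str = "llama3.2:3b",
                 base_url: str = OLLAMA_URL, timeout: float = 300.0) -> str:
    """Send the messages to Ollama's /api/chat and return the model's reply."""
    payload = {
        "model": model,
        "messages": build_sql_messages(schema, question),
        "stream": False,  # single JSON object instead of streamed chunks
    }
    request = urllib.request.Request(
        f"{base_url}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return json.loads(resp.read())["message"]["content"].strip()


if __name__ == "__main__":
    schema = "CREATE TABLE orders (id INTEGER, customer TEXT, total REAL, created_at DATE);"
    try:
        print(generate_sql(schema, "What was the total revenue per customer?"))
    except OSError as err:
        print(f"Ollama not reachable at {OLLAMA_URL}: {err}")
```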

Troubleshooting & Common Issues

“Model not found” in Ollama node

This error occurs when you try to use a model in n8n that hasn’t been downloaded to your Ollama container yet.

Solution: Download the model first by running:

For example, to pull the Llama 3.2 3B model:

Connection refused

This happens when n8n cannot connect to the Ollama service, usually due to network configuration issues.

Solutions:

- Ensure both containers are running with the --network=host flag

- Verify Ollama is accessible by testing: curl http://localhost:11434/api/tags

- For Mac users, use host.docker.internal:11434 instead of localhost:11434

- Check if the Ollama container is running: docker ps
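These checks can be bundled into one script that tries each candidate base URL and reports which one answers like an Ollama server. A minimal Python sketch, assuming the default port 11434; with --network=host the n8n container shares the host's network, so a result from the host reflects what n8n sees. `host.docker.internal` may not resolve on the host itself, so an "unreachable" result for it there is not conclusive. The function names are hypothetical.

```python
import json
import urllib.request

# Candidate base URLs from the troubleshooting list.
CANDIDATES = ["http://localhost:11434", "http://host.docker.internal:11434"]


def probe(base_url: str, timeout: float = 2.0):
    """Return how many models Ollama reports at base_url, or None if unreachable."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return len(json.loads(resp.read()).get("models", []))
    except (OSError, ValueError):
        # Refused, unresolvable, timed out, HTTP error status, or not JSON.
        return None


def first_working(candidates=CANDIDATES, timeout: float = 2.0):
    """Return the first candidate URL that answers like an Ollama server."""
    for url in candidates:
        if probe(url, timeout) is not None:
            return url
    return None


if __name__ == "__main__":
    for url in CANDIDATES:
        count = probe(url)
        print(url, "unreachable" if count is None else f"OK, {count} model(s)")
```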

“Pull model manifest: 412” error

This error typically occurs when using outdated Docker images or when there are authentication issues with model repositories.

Solutions:

- Update to the latest Docker images:

- Clear Docker cache and restart containers

- Check your internet connection and firewall settings

- Verify the model name is correct and still available in the Ollama library
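Since the 412 response is most often reported when the Ollama server is older than a model requires, it helps to compare versions before and after updating the image. This Python sketch reads `GET /api/version` from a local Ollama server and compares it numerically against an optional minimum you pass on the command line (for example, one stated on the model's library page, if it lists one). The helper names are hypothetical.

```python
import json
import sys
import urllib.request


def parse_version(text: str) -> tuple:
    """Turn "0.5.7" or "v0.6.0-rc1" into a comparable tuple like (0, 5, 7)."""
    core = text.strip().lstrip("v").split("-")[0]
    return tuple(int(part) for part in core.split("."))


def is_older(current: str, minimum: str) -> bool:
    # Tuples compare numerically, so 0.5.7 < 0.10.0 (a plain string compare gets this wrong).
    return parse_version(current) < parse_version(minimum)


def server_version(base_url: str = "http://localhost:11434", timeout: float = 5.0) -> str:
    """Ask a running Ollama server for its version via GET /api/version."""
    with urllib.request.urlopen(f"{base_url}/api/version", timeout=timeout) as resp:
        return json.loads(resp.read())["version"]


if __name__ == "__main__":
    try:
        current = server_version()
    except OSError as err:
        print(f"Ollama not reachable: {err}")
    else:
        print("Ollama server version:", current)
        if len(sys.argv) > 1:  # optional minimum version to compare against
            print("update needed:", is_older(current, sys.argv[1]))
```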

For comprehensive guides and documentation, visit docs.n8n.io. The platform offers extensive customization options and community support for automation workflows.