Jetson Generative AI – NanoOwl

Vision Transformers (ViT) apply transformer architectures, originally developed for natural language processing, to visual data such as images and video. For more detail on ViT, see the video linked in the original post. In this article, you’ll learn how to run NanoOWL, a version of OWL‑ViT optimized with NVIDIA TensorRT, on a Jetson device in real time.

Requirements

- A Jetson AI Kit or Developer Kit with at least 8 GB of memory, running JetPack 5 or JetPack 6.

- A USB camera

Step‑by‑Step Setup

1. Clone the jetson-containers Git repository to your Jetson.

2. Navigate to the directory of the cloned repository.

3. Update the package lists and install pip3.

4. Install the packages and libraries used by NanoOWL.

5. Test the connection of the camera attached to the Jetson by listing its video devices. If the output shows no video* device (for example, /dev/video0), check the camera connections and restart the Jetson.

6. While inside the cloned repository (jetson-containers), start the NanoOWL container. This downloads and sets up the required Docker images, so when asked to confirm the docker pull, type “yes” or “y”.

7. Once the Docker image is downloaded and set up, the terminal automatically opens a shell inside the container.

8. Inside the container, navigate to the example code directory.

9. Start the tree_demo application.

10. When you see the line Running on http://0.0.0.0:7860, the sample application has started.

If you are working directly on the Jetson, paste this address into a browser to view the live camera stream. If you are connected to the Jetson via SSH from another device, open the following address in a browser on that device instead: http://IP_ADDRESS:7860

Replace IP_ADDRESS with the actual IP address of your Jetson.
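As a small sketch of the remote-access step, the helper below builds the browser address from the Jetson's IP address and can check whether the demo port answers. The port 7860 comes from the startup line shown in step 10; the function names and the example IP 192.168.1.42 are hypothetical placeholders, not part of NanoOWL.

```python
import socket

DEMO_PORT = 7860  # port taken from the "Running on http://0.0.0.0:7860" startup line


def build_demo_url(jetson_ip: str, port: int = DEMO_PORT) -> str:
    """Return the address to open in a browser on the remote machine."""
    return f"http://{jetson_ip}:{port}"


def is_demo_reachable(jetson_ip: str, port: int = DEMO_PORT, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to the demo port succeeds."""
    try:
        with socket.create_connection((jetson_ip, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


# Hypothetical address; replace it with your Jetson's actual IP.
print(build_demo_url("192.168.1.42"))  # prints http://192.168.1.42:7860
```

If the page does not load, calling is_demo_reachable with the same IP address from the remote machine can help tell a network problem apart from a demo that has not started yet.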

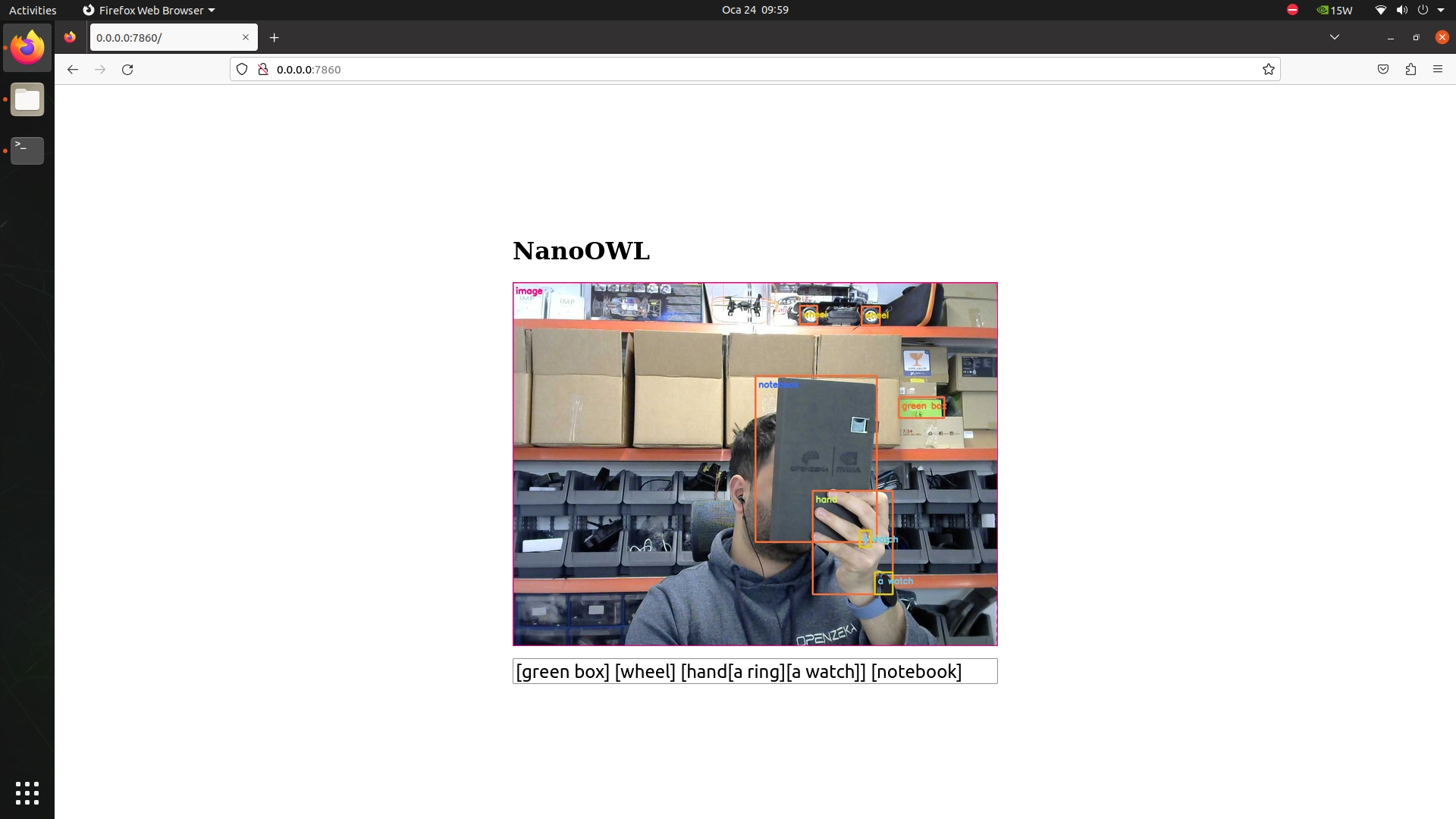

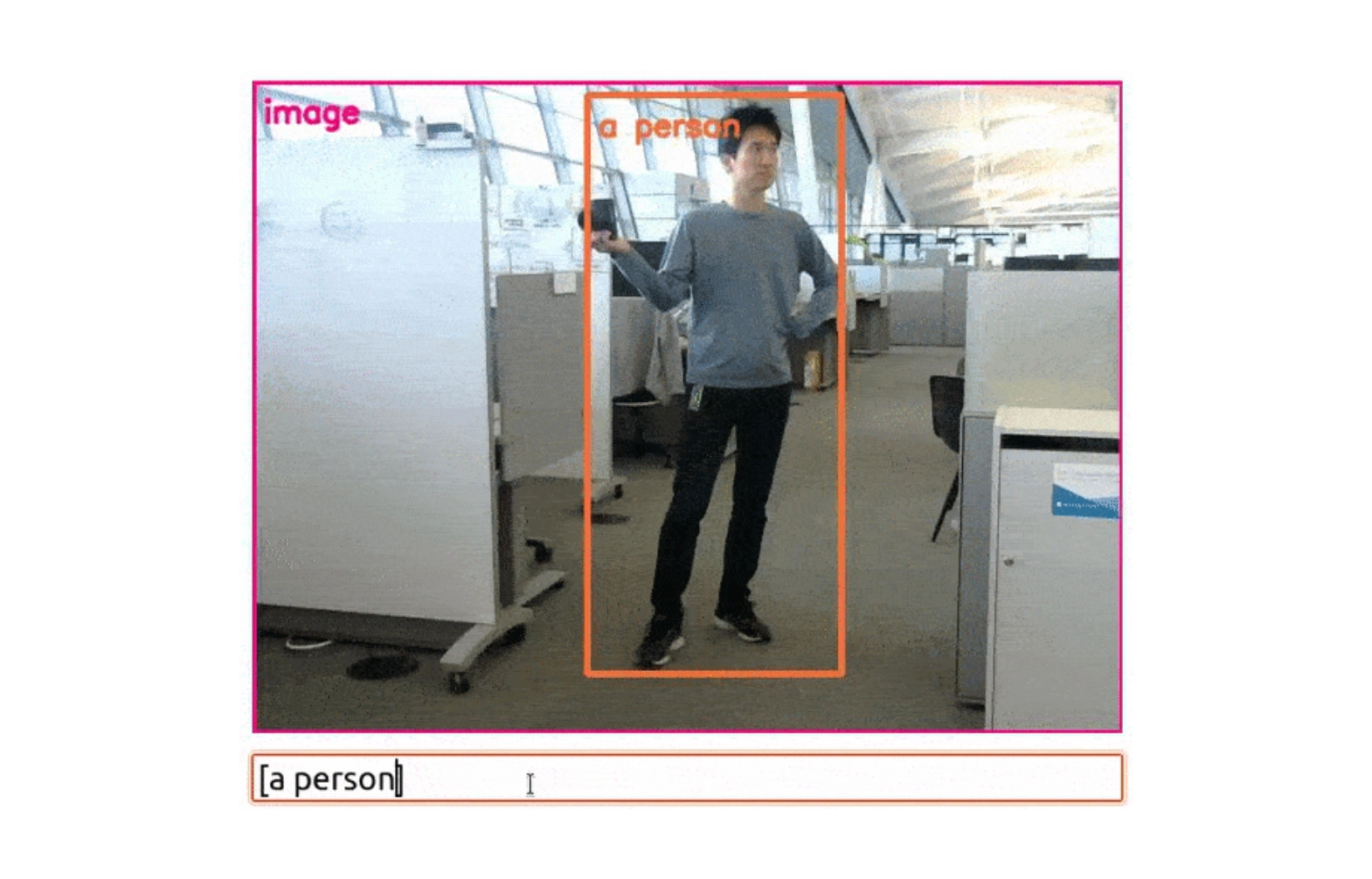

The screenshot from our test setup shows the output. The prompts you enter are applied to the live camera feed in real time.
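The setup steps refer to commands that are not reproduced in this article. As a recap, the sketch below lists one plausible command per step and checks for attached cameras. Every command string is an assumption based on the public jetson-containers and NanoOWL repository layouts: the repository URL, requirements.txt, the run.sh and autotag scripts, and the engine file path are not taken from this article. Verify them against the current jetson-containers and NanoOWL documentation before running anything. The script only prints the commands; it does not execute them.

```python
import glob
from typing import List, Tuple

# ASSUMPTION: none of these command strings appear in the article. They are
# plausible commands for each numbered step and may differ between releases.
# Steps 1-6 run on the Jetson host; steps 8-9 run inside the container.
ASSUMED_STEPS: List[Tuple[int, str]] = [
    (1, "git clone https://github.com/dusty-nv/jetson-containers"),
    (2, "cd jetson-containers"),
    (3, "sudo apt update && sudo apt install -y python3-pip"),
    (4, "pip3 install -r requirements.txt"),
    (5, "ls /dev/video*"),
    (6, "./run.sh $(./autotag nanoowl)"),
    (8, "cd examples/tree_demo"),
    (9, "python3 tree_demo.py ../../data/owl_image_encoder_patch32.engine"),
]


def find_cameras(pattern: str = "/dev/video*") -> List[str]:
    """Return the sorted device nodes matching the pattern (the step 5 check)."""
    return sorted(glob.glob(pattern))


def format_steps(steps: List[Tuple[int, str]] = ASSUMED_STEPS) -> str:
    """Render the assumed commands as one 'step N: command' line per step."""
    return "\n".join(f"step {number}: {command}" for number, command in steps)


if __name__ == "__main__":
    print(format_steps())
    cameras = find_cameras()
    if cameras:
        print("cameras found:", ", ".join(cameras))
    else:
        print("no video* device found; check the camera connection")
```

Keeping the commands in a printed list rather than executing them is deliberate: the container launch in step 6 is interactive, and the later steps only make sense inside that container's shell.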